Logging

This section provides an overview of the tools and their configurations for interacting with infrastructure and game server logs.

Target Audience

This page is primarily intended for users on Private Cloud plans. If you are using Metaplay Cloud with a Pre-launch/Production plan, information from this page may not be directly relevant to your needs.

The main tools we use are Promtail, Loki, AWS CloudWatch Logs, and Grafana; each is described below.

Logging is a broad subject, and in the context of running Metaplay-based game servers and supporting infrastructure, we can roughly categorize sources of log data into the following categories:

Each of these sources behaves slightly differently, and the default way of capturing and using their logs differs as well. For example, many AWS services can be configured to publish logs to AWS CloudWatch Logs, where the logs can be persisted or consumed. AWS EKS control plane logs, for instance, are published to CloudWatch Logs in this way. For many AWS services, we do not need to influence how the logs are gathered, and often cannot do so to any great extent.

On the other hand, most components of the Metaplay stack run as Docker containers on top of EKS. This means that container logs are generated and exist mainly in the context of the underlying Kubernetes node, which runs those containers. In our case, these hosts are often AWS EC2 instances. We leverage Promtail, which runs on each host as a Kubernetes DaemonSet, to monitor the host's /var/log directory and capture all logs generated from containers on the given host. Promtail can be used to additionally decorate each gathered log row with metadata, such as which Kubernetes pod and which container in the pod generated the log row. Collecting this type of data allows easier consumption of logs further down the line.

In practice, we prefer to collect Kubernetes logs this way because it means we do not need to customize log collection per service. Instead, we can collect the logs of all containers the same way, be they long-running services or ephemeral jobs.

Once logs are gathered, we by default aggregate them to one of two locations: AWS CloudWatch Logs for AWS-native logs, and Loki for logs collected from Kubernetes nodes.

Functionally, log aggregation allows us to define a central location where the log data is collected, which solves issues like how the log data is persisted and how it can be interacted with or queried.

As discussed above, CloudWatch Logs offers a logical location for us to aggregate AWS-native logging data. We attempt to segregate services into different CloudWatch Logs log groups based on logical components (e.g., EKS logs go to a different log group than Lambda function logs), and within those log groups, we attempt to split logs into log streams based on further logical groupings within the component (e.g., within the EKS log group, different log streams exist for the Kubernetes API server, authentication service, controller manager, etc.).

For logs originating from Kubernetes nodes via Promtail, we by default use Grafana Labs' Loki as the aggregator. Our current design runs Loki in-cluster and uses AWS S3 for the persistence of log files. Unlike CloudWatch Logs, which is an AWS PaaS, Loki requires more operational caretaking, especially in large deployments, and the later parts of this document will outline various strategies available to help with scaling Loki up if log volumes increase.

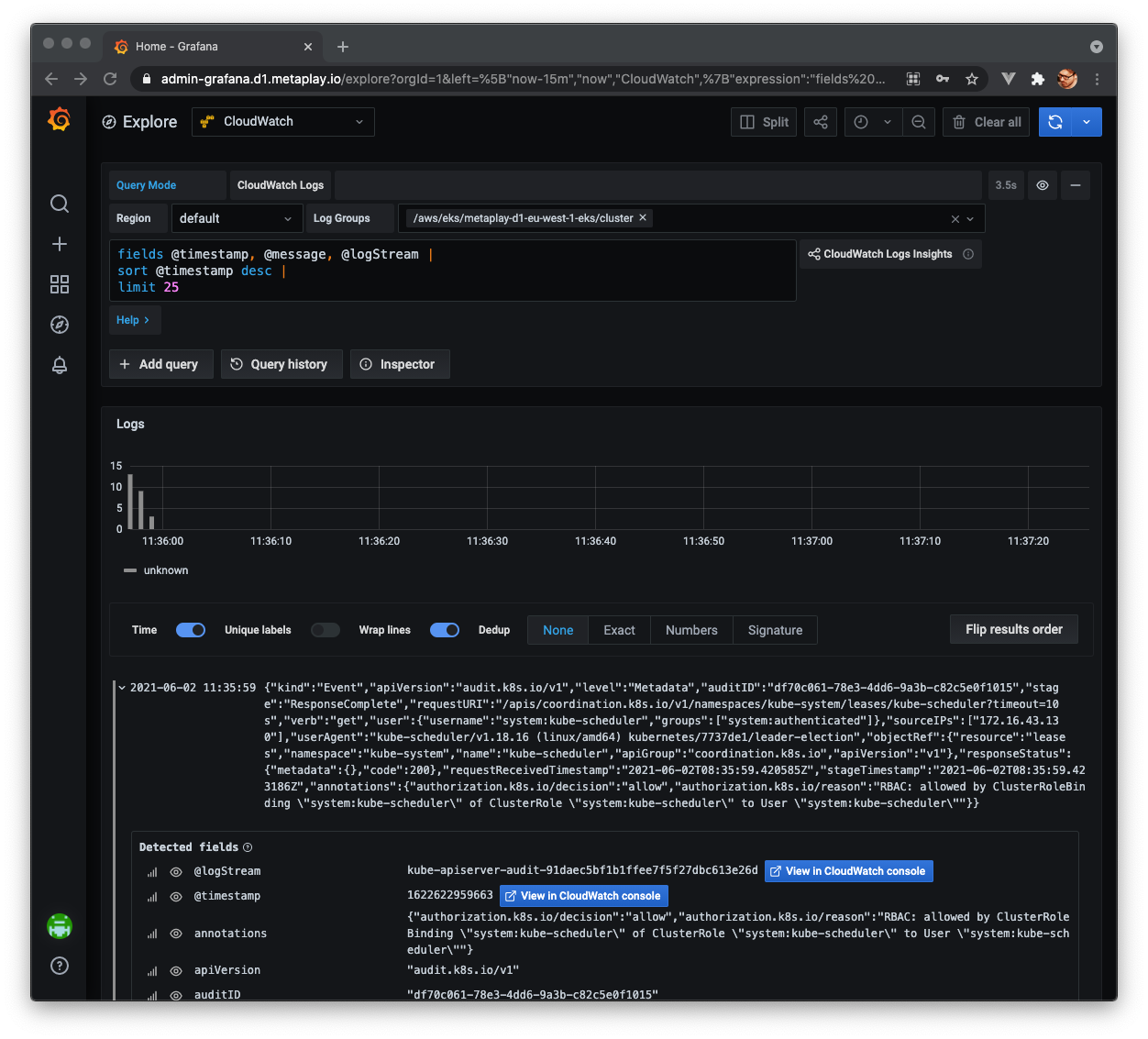

For end users of logs, whether game server developers or operations engineers looking after the infrastructure, our base design places Grafana as the central source of not only metrics but also log data. You can think of Grafana as a lens onto these different troves of data: it offers a unified location to query logs from, or even to create dashboards based on log analysis.

Each log aggregator has a slightly different query language: Loki is queried with LogQL, and AWS CloudWatch Logs with CloudWatch Logs Insights queries. The official Grafana Loki and AWS documentation are good resources for getting acquainted with each.

Assuming that you manage your infrastructure through the Metaplay-provided Terraform modules, configuring a basic logging setup is relatively straightforward. The environments/aws-region module README is a good place to start for understanding which parameters are available to you. To get started, we can configure our environment as follows:

    module "infra" {
      source = "git@github.com:metaplay-shared/infra-modules.git//environments/aws-region?ref=main"

      # Snip, your other configurations are here...

      promtail_enabled               = true
      loki_enabled                   = true
      loki_aws_storage_allow_destroy = true
      s3_name_random_suffix_enabled  = true
    }

The above example explicitly enables both Promtail and Loki (they are enabled by default, so technically they would not need to be called out). Additionally, we allow the creation of an S3 bucket that Loki can persist log data to. This makes it easy to store larger amounts of log data than if the data were only stored ephemerally inside the cluster's own storage. We also set loki_aws_storage_allow_destroy to allow Terraform to clear the contents of the Loki S3 bucket when terraform destroy is run. This is convenient for development environments but should be set to false in production environments, where you do not want to destroy historical log data, even accidentally. Finally, we explicitly enable s3_name_random_suffix_enabled to avoid the risk of S3 bucket name collisions, since bucket names must be globally unique across all AWS accounts.
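For a production environment, the same block would differ in one flag. The following is a minimal sketch that reuses only the parameters shown above, with loki_aws_storage_allow_destroy set to false as recommended; the rest of your module configuration is assumed to be unchanged.

```hcl
module "infra" {
  source = "git@github.com:metaplay-shared/infra-modules.git//environments/aws-region?ref=main"

  # Snip, your other configurations are here...

  promtail_enabled = true
  loki_enabled     = true

  # Production: do not let Terraform clear the Loki S3 bucket on `terraform destroy`,
  # so that historical log data is not removed as part of a teardown.
  loki_aws_storage_allow_destroy = false

  s3_name_random_suffix_enabled = true
}
```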

With the above setup deployed and in play, you can already access container logs via Loki using Grafana's Explore section and selecting Loki as the data source.

If your game's log volume grows, it is possible to hit the limits of the above basic setup with Loki quite easily. Typically, this manifests as Loki becoming unresponsive to queries, or as a significant increase in the general load of the cluster.

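In these cases, we can apply a few different tactics to allow Loki to scale up:

1. Increase the number of Loki replicas (loki_replicas).
2. Add Loki reader replicas (loki_reader_replicas).
3. Enable the Loki query frontend (loki_queryfrontend_enabled).
4. Move the Loki pods to a dedicated Kubernetes node group.
5. Run the Loki pods on AWS EKS Fargate instead of on EC2 nodes.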

The above tactics 1-4 can be implemented via the Terraform module using the following configurations:

    module "infra" {
      source = "git@github.com:metaplay-shared/infra-modules.git//environments/aws-region?ref=main"

      # Snip, your other configurations are here...

      promtail_enabled               = true
      loki_enabled                   = true
      loki_aws_storage_allow_destroy = true

      # tactic 1
      loki_replicas = 2

      # tactic 2
      loki_reader_replicas = 3

      # tactic 3
      loki_queryfrontend_enabled  = true
      loki_queryfrontend_replicas = 1

      # tactic 4
      loki_shard_pool               = "logging"
      loki_reader_shard_pool        = "logging"
      loki_queryfrontend_shard_pool = "logging"

      shard_node_groups = {
        # Snip, your pre-existing shard node group configurations, make sure that all pool spec keys exist...

        logging = {
          instance_type = ["c5.large"]
          desired_size  = 3 # initial node group size of 6 (= 3*length(logging.azs))
          min_size      = 1
          max_size      = 10
          autoscaler    = true
          azs           = ["eu-west-1a", "eu-west-1b"]
        }
      }
    }

Tactic 4, moving the Loki pods to a separate Kubernetes node group, is especially wise in production environments: it limits the blast radius and safeguards the operation of the rest of the cluster even if Loki gets overwhelmed. In the example above, we have defined a new shard node group named logging, which is guaranteed not to run any pods other than the ones that explicitly request to run in the group. Using the shard_pool parameters, we specify which Loki components we want to schedule there. We also enable the autoscaler to manage the node group for us. This makes it easier to adjust the replica numbers later as needed, since the node group will resize accordingly.
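As an illustration of that last point, scaling up later could be as small as raising a replica count while leaving the node group definition alone. The sketch below assumes the logging node group from the example above is already in place; the value 5 is an arbitrary example, not a recommendation.

```hcl
module "infra" {
  source = "git@github.com:metaplay-shared/infra-modules.git//environments/aws-region?ref=main"

  # Snip, your other configurations are here, including the unchanged
  # shard pool settings and shard_node_groups.logging definition...

  # Raised from 3; illustrative value only.
  loki_reader_replicas = 5
}
```

With the autoscaler enabled on the node group, the expectation is that the extra pods are accommodated within the group's configured min_size and max_size.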

On a more technical level, the Terraform module deploys Loki using the metaplay-services Helm chart. The chart is designed to prefer scheduling Loki pods away from each other where possible. As long as enough underlying nodes are available, Kubernetes will attempt to spread the pods across them.

DANGER

This approach is still slightly experimental, so beware of dragons!

Tactic 5 is an alternative to tactic 4. It achieves fundamentally the same thing, but uses AWS EKS Fargate profiles to let the Kubernetes scheduler run Loki pods on Fargate instead of on the EC2 nodes. The benefit is that there is no need to manage the underlying node infrastructure. The downside is that Fargate-based pods are charged separately based on Fargate pricing and tend to be more expensive than running pods on EC2 nodes.

That said, we can adjust the configuration of Loki as presented below to target deployments into Fargate:

    module "infra" {
      source = "git@github.com:metaplay-shared/infra-modules.git//environments/aws-region?ref=main"

      # Snip, your other configurations are here...

      promtail_enabled               = true
      loki_enabled                   = true
      loki_aws_storage_allow_destroy = true

      # tactic 1
      loki_replicas = 2

      # tactic 2
      loki_reader_replicas = 3

      # tactic 3
      loki_queryfrontend_enabled  = true
      loki_queryfrontend_replicas = 1

      # tactic 4
      loki_shard_pool               = "logging"
      loki_reader_shard_pool        = "logging"
      loki_queryfrontend_shard_pool = "logging"

      # tactic 5
      fargate_profiles = {
        loki = {
          namespace = "metaplay-system"
          labels = {
            app     = "loki"
            release = "metaplay-services"
          }
        }

        loki-reader = {
          namespace = "metaplay-system"
          labels = {
            app     = "loki-reader"
            release = "metaplay-services"
          }
        }
      }
    }

What we do here is tell AWS EKS that any pods in the configured namespaces that carry the corresponding labels should be scheduled onto the corresponding Fargate profiles. In the case above, we know that our Loki pods typically have an app label of either loki or loki-reader, and a release label with the value metaplay-services. We also know that the pods will exist in the metaplay-system namespace. Putting these together allows us to target specifically these pods for Fargate execution.

Note that if you apply this configuration change to an existing cluster, you may need to delete the individual Loki pods that are currently running on EC2 nodes so that they get rescheduled onto Fargate.