Implementing Experiments

Experiments are a tool to conduct controlled studies of how changes to your game configs might affect your players.

The first thing to do before starting to build an experiment is to decide what you want to test, how you will test it, and how to interpret the results. A great way to do this is to develop a solid hypothesis that clearly states what you think the outcome of the change will be. A good hypothesis should answer the question, "What are we hoping to learn from this experiment?"

💡 Remember

Your experiment may not always give the expected outcome, but you should always try to construct it in such a way that you can learn something from the results, regardless of the outcome.

Using Metaplay's Idler sample, imagine a situation where players are quickly bouncing from your game and you believe that this is because the game progression is too slow. You might want to see if making the early-game progression faster will improve the early player retention rate. An example hypothesis for this might be: "If we make the first three producers cheaper, then the day one retention rates will increase because players enjoy progressing further." Based on this hypothesis, we know what to change and what metrics to track to conduct the experiment.

📋 Plan Your Experiments

You could use another framework for defining your experiments, or you could use no framework at all. But whatever you do, make sure that you understand how to interpret the results before you start!

Once you have a good hypothesis, deciding on your Variants should be easy. Remember that the Variants are modifications from your existing game config. In the simplest scenarios, a single Variant should change one thing only, and a set of Variants should change the same thing but in different ways.

For example, in the above scenario, we might define a single Variant:

Or we might want to go further and produce two Variants:

📊 About Multiple Variants

While an experiment in the Metaplay SDK can have more than one Variant, it's often a good idea to stick with just one - it's much easier to achieve statistical significance and analyze the attribution of your results when you make fewer changes at once.

Now that we have decided on our hypothesis and our Variants, we can move on to defining them in our game config. As an example, we will use the hypothesis that we developed earlier on for testing early-game progression in Idler. The game config in Idler is defined using Google Sheets, and in general, the experiment data would be entered into the sheets by a game designer.

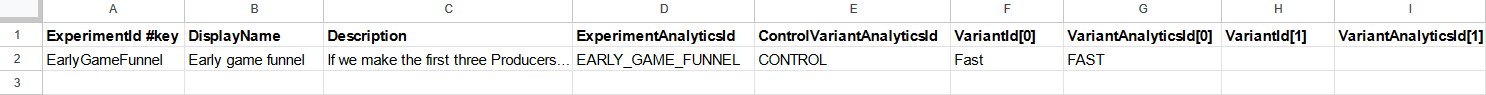

First, we'll set up our experiment. In Idler's config sheet, we'll add an entry for our new experiment in the experiment sheet.

✅ Good to know

We'll cover Analytics IDs later in this document.

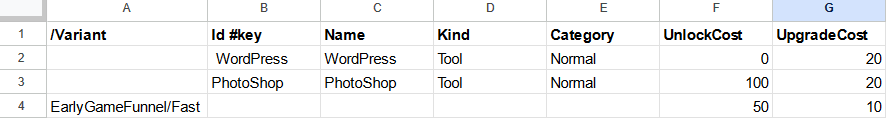

Next, we'll create a Variant. To override a config value, simply add the ExperimentId to the /Variant column and fill in the ConfigKey and whichever values you want to override.

You can modify as many config items and values as you like. Just make sure the experiment doesn't change too many things at once to keep your data analyst happy!

Note that you can also define the Variant row anywhere in the sheet, as long as you add the ConfigKey correctly.

In case many of your variants share the configuration for a particular row, you can also list multiple variants as a comma-separated list in the /Variant column to reduce the number of rows in your sheet!
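One way to picture Variant rows is as a patch laid on top of the base config. The following is a minimal sketch of that idea, not the SDK's implementation: `VariantRow`, `VariantOverlay`, and `ApplyVariant` are hypothetical names, config rows are modeled as plain string dictionaries rather than real config types, and the variant identifiers are treated as opaque strings.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of one Variant row: the /Variant cell (one id or a
// comma-separated list), the ConfigKey it targets, and the overridden cells.
public sealed record VariantRow(
    string VariantCell,
    string ConfigKey,
    Dictionary<string, string> Overrides);

public static class VariantOverlay
{
    // Returns a patched copy of the base config as seen by `variantId`.
    public static Dictionary<string, Dictionary<string, string>> ApplyVariant(
        Dictionary<string, Dictionary<string, string>> baseConfig,
        IEnumerable<VariantRow> variantRows,
        string variantId)
    {
        // Copy every row so the base config stays intact for other players.
        var result = baseConfig.ToDictionary(
            kv => kv.Key,
            kv => new Dictionary<string, string>(kv.Value));

        foreach (VariantRow row in variantRows)
        {
            // A /Variant cell may name several variants, separated by commas.
            string[] targets = row.VariantCell.Split(
                ',', StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries);
            if (Array.IndexOf(targets, variantId) < 0)
                continue; // this row belongs to some other variant

            if (!result.TryGetValue(row.ConfigKey, out var item))
                throw new KeyNotFoundException($"Unknown ConfigKey '{row.ConfigKey}'");

            foreach (KeyValuePair<string, string> cell in row.Overrides)
            {
                // Empty cells are not overrides: the base value is kept.
                if (!string.IsNullOrWhiteSpace(cell.Value))
                    item[cell.Key] = cell.Value;
            }
        }
        return result;
    }
}
```

Calling `ApplyVariant` once per variant gives you one specialized view of the config per group, while the control group keeps reading `baseConfig` unchanged.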

The first time you're setting up experiments in your project, remember to define the PlayerExperiments library in ServerGameConfig. That may also be the first time you define the ServerGameConfig class in the first place.

    public class ServerGameConfig : ServerGameConfigBase
    {
        [GameConfigEntry(PlayerExperimentsEntryName)]
        public GameConfigLibrary<PlayerExperimentId, PlayerExperimentInfo> PlayerExperiments { get; private set; }
    }

Now you can build and publish your new game config, as shown in Working with Game Config Data.

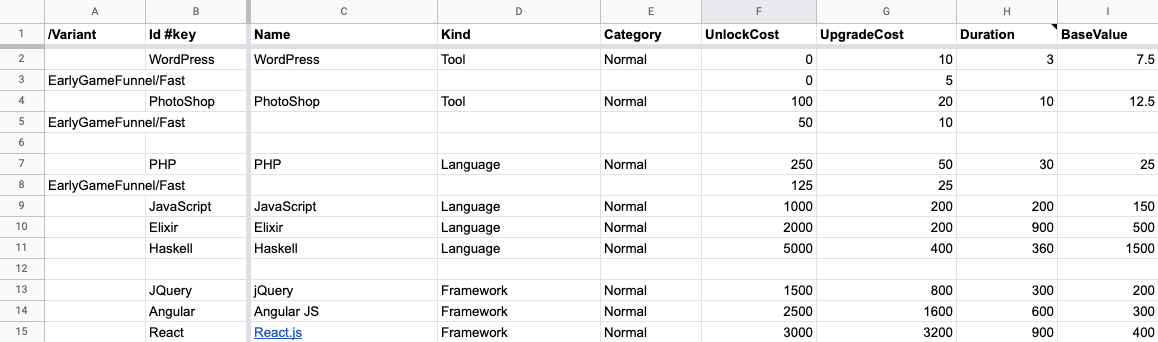

The SDK also supports defining the variants on the rows directly under the affected item, as such:

⚠️ Heads up

Remember that publishing a new game config with an experiment doesn't change anything for existing players yet - all it does is define a new experiment and Variants for later rollout.

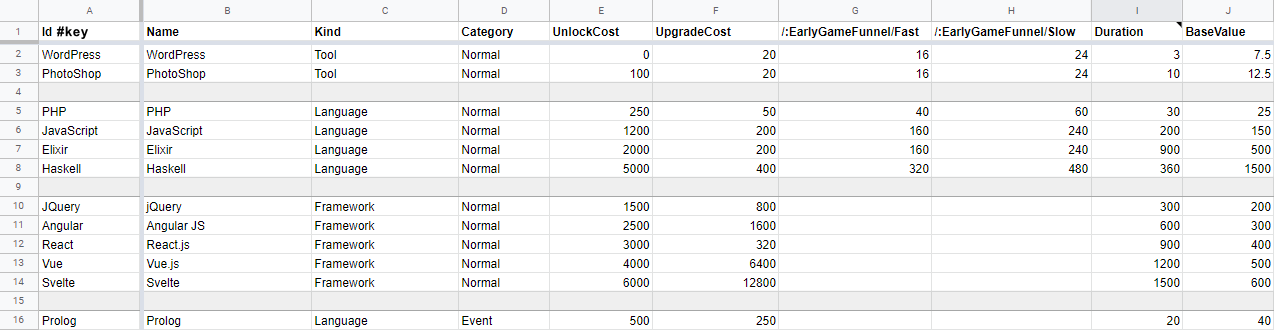

When running experiments involving changing a small number of values of a large number of config rows, it is often more convenient to describe the Variant in the spreadsheet by using Variant columns.

Modifying our previous experiment scenario, let's say that we'd like to only experiment with the UpgradeCost property but modify the values of all Producers up to Haskell. Our experiment plan would then be:

We could naturally declare the variants in the same fashion as before, but now our sheet would contain more Variant rows than regular rows, and maintaining the sheet would start to become cumbersome. Using Variant columns instead, our sheet is transformed to look like this:

Variant columns are declared by the special header column prefix "/:". A Variant column contains override values for the first regular column on its left, and as in the example, multiple variants can be declared for a single property by separate Variant columns. Similarly to Variant rows, empty values in the column result in the property for the row not being overridden.
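The column rules above can be sketched as a small row resolver. This is an illustration only: `VariantColumns.ResolveRow` is a hypothetical helper, and it assumes that the text after the "/:" prefix is the variant's identifier, which this page does not spell out.

```csharp
using System;
using System.Collections.Generic;

public static class VariantColumns
{
    const string Prefix = "/:";

    // Resolves one sheet row for `variantId`. Pass null or "" to get the
    // base (control) values with no overrides applied.
    public static Dictionary<string, string> ResolveRow(
        IReadOnlyList<string> headers,
        IReadOnlyList<string> cells,
        string variantId)
    {
        if (headers.Count != cells.Count)
            throw new ArgumentException("Each header needs exactly one cell.");

        var resolved = new Dictionary<string, string>();
        string lastRegularColumn = null;

        for (int i = 0; i < headers.Count; i++)
        {
            string header = headers[i];
            if (!header.StartsWith(Prefix, StringComparison.Ordinal))
            {
                // Regular column: take the base value and remember the column,
                // because Variant columns to its right refer back to it.
                lastRegularColumn = header;
                resolved[header] = cells[i];
                continue;
            }

            if (lastRegularColumn == null)
                throw new FormatException("A Variant column needs a regular column on its left.");

            // Assumption: the header text after "/:" identifies the variant.
            string columnVariant = header.Substring(Prefix.Length).Trim();
            bool applies = !string.IsNullOrEmpty(variantId) && columnVariant == variantId;

            // Empty cells leave the property un-overridden for this row.
            if (applies && !string.IsNullOrWhiteSpace(cells[i]))
                resolved[lastRegularColumn] = cells[i];
        }
        return resolved;
    }
}
```

Because every Variant column points back at the nearest regular column on its left, several Variant columns can sit side by side and all override the same property.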

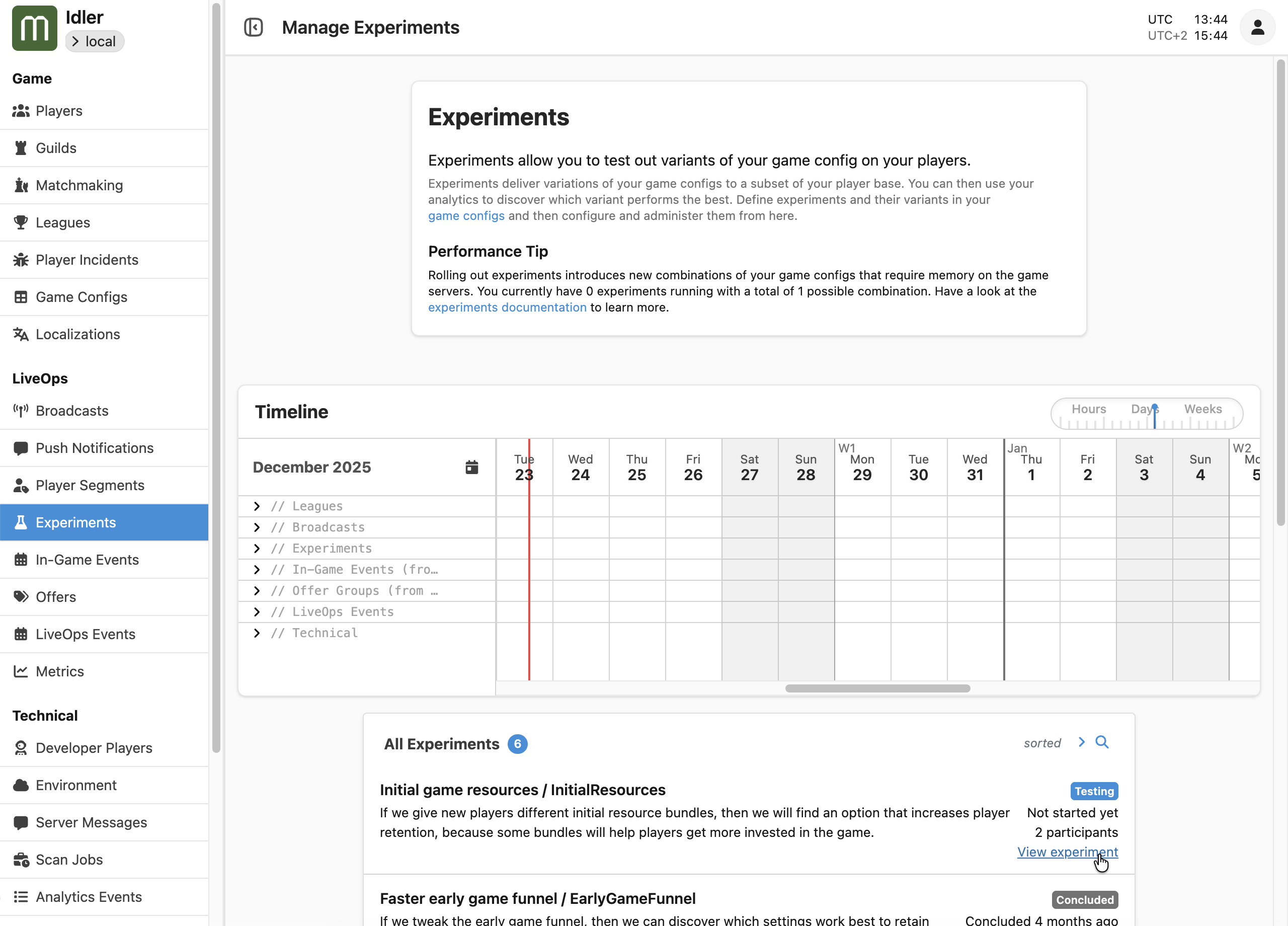

Once your experiment has been defined in a game config and the same game config has been published, you can start using the LiveOps Dashboard to interact with it. First, let's navigate to the experiments page:

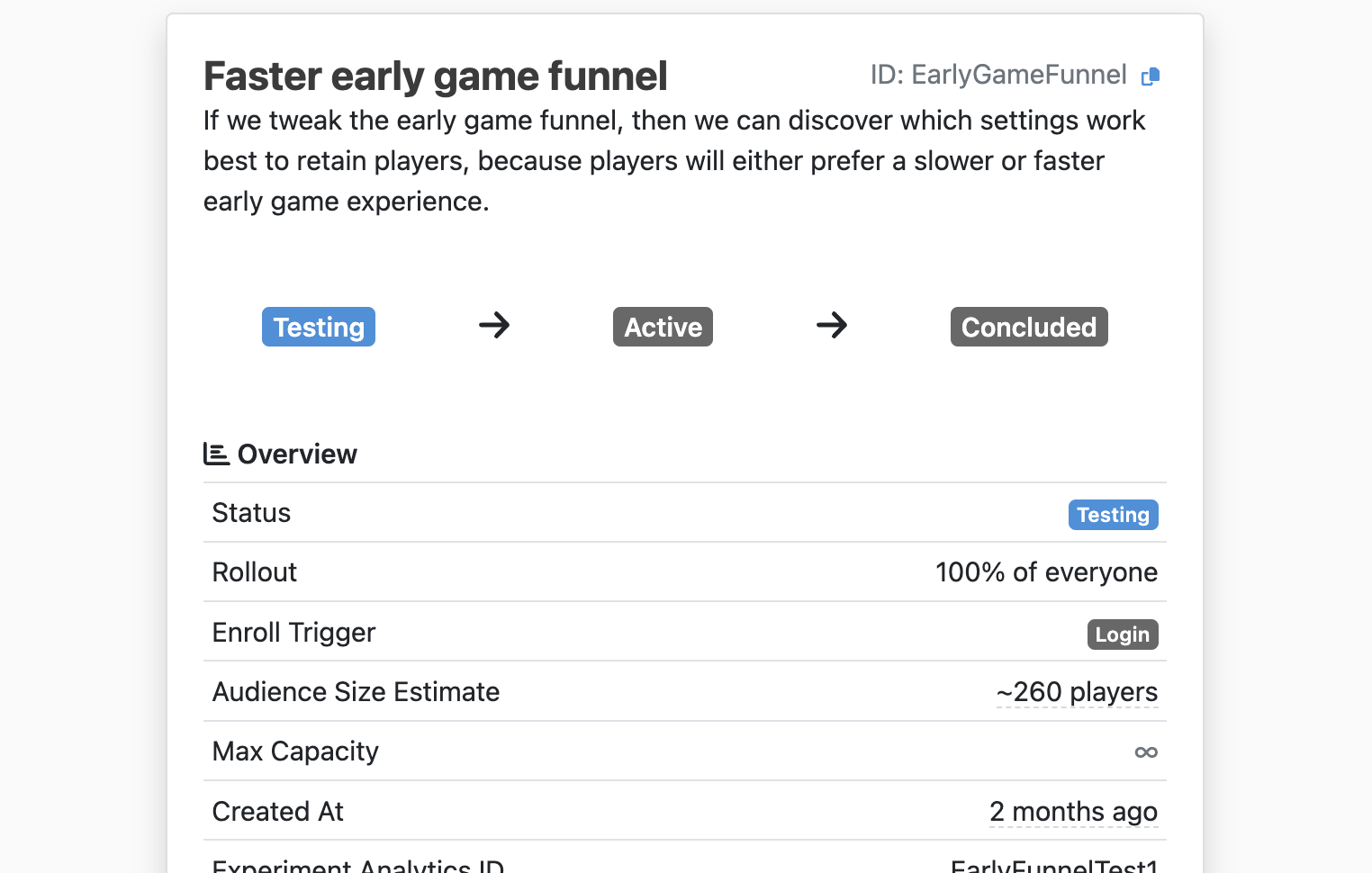

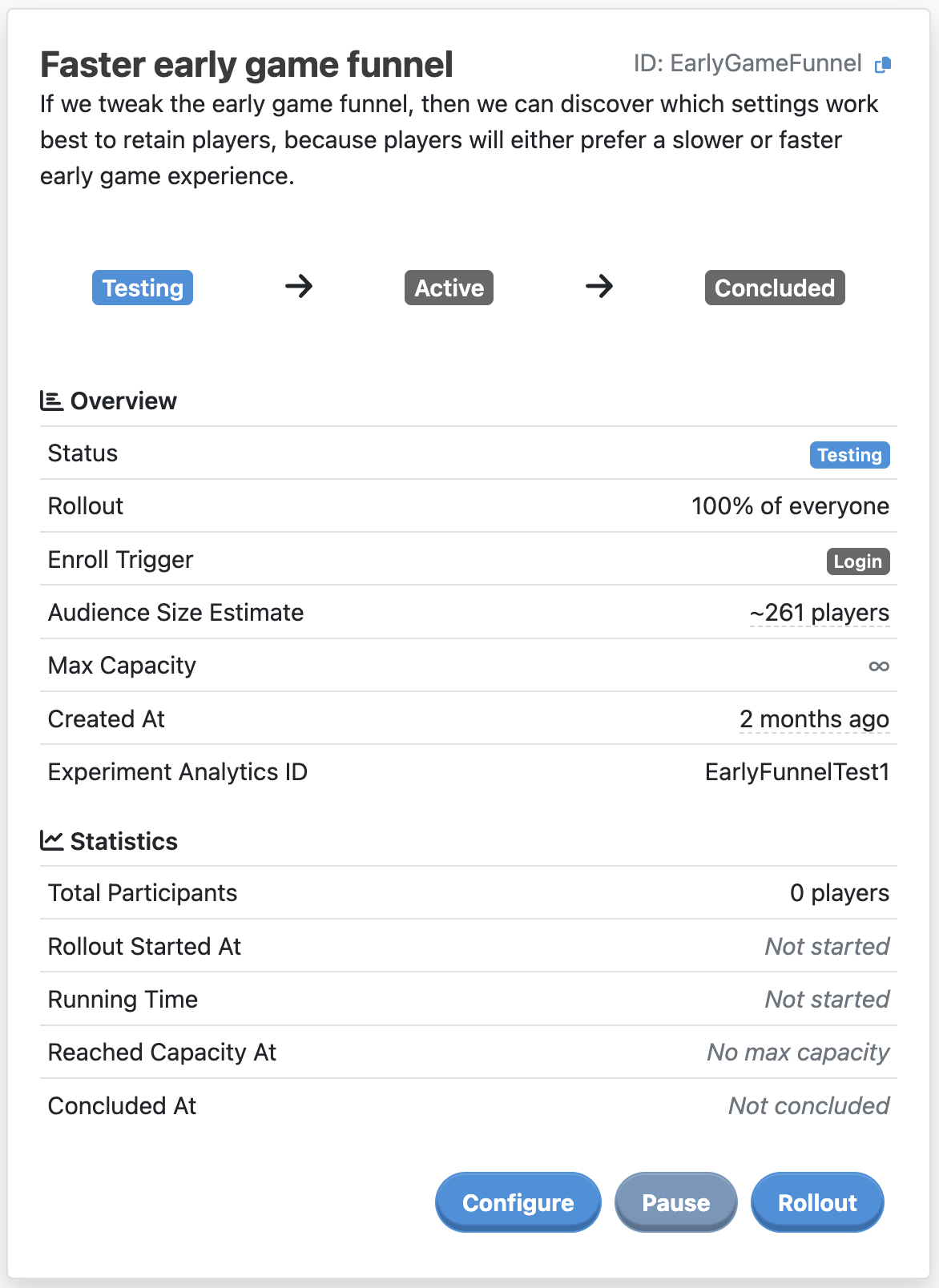

Click View experiment to open a page containing more detailed information:

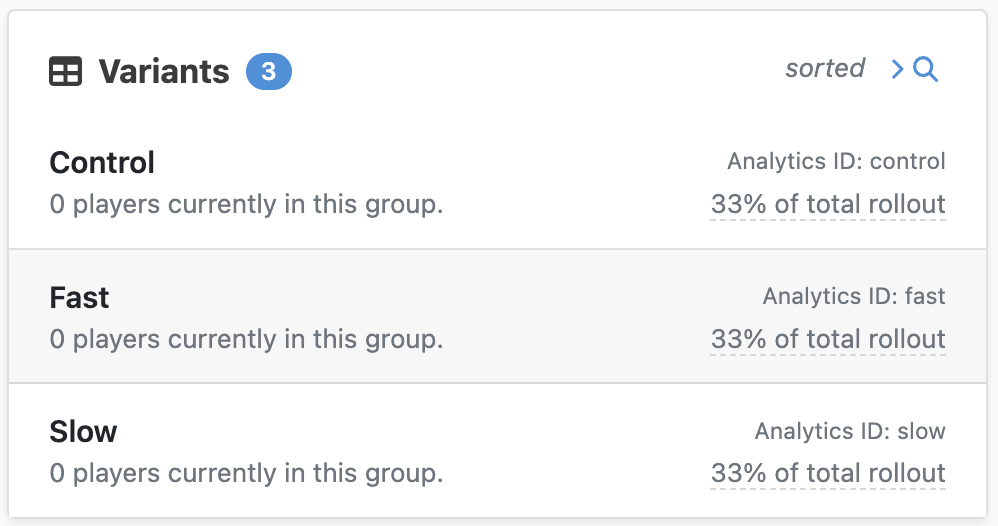

The main part of this page is the overview card, which shows you the current status of this experiment and lets you control it - we'll cover this in the next section. For now, we can focus on the Variants card:

Now is a great time to check that your Variants are configured the way that you expect them to be. By clicking on View config diff, you can examine the differences between a Variant and the game config that it is based on. If something's not quite right, you can update the Variant configuration in the game configs and rebuild. Once you're happy with the data, it's time to move on to running the experiment.

At this point, the experiment and its Variants have been created and configured correctly by the game's designer - the experiment is almost ready to go! In this section, we'll walk through the process of running an experiment through to conclusion. This would usually be done by someone in the LiveOps team, but in a smaller team that might well be the same person as the designer of the experiment.

We introduced the lifecycle model earlier on in this document. Now we'll look at each one of those phases in more detail, and we'll do all of that from the experiment overview card on the detail page of the LiveOps Dashboard. Remember that the lifecycle looks like:

Testing → Active → Concluded

The experiment is in the testing phase - it is not visible and has no effect, except for Tester players.

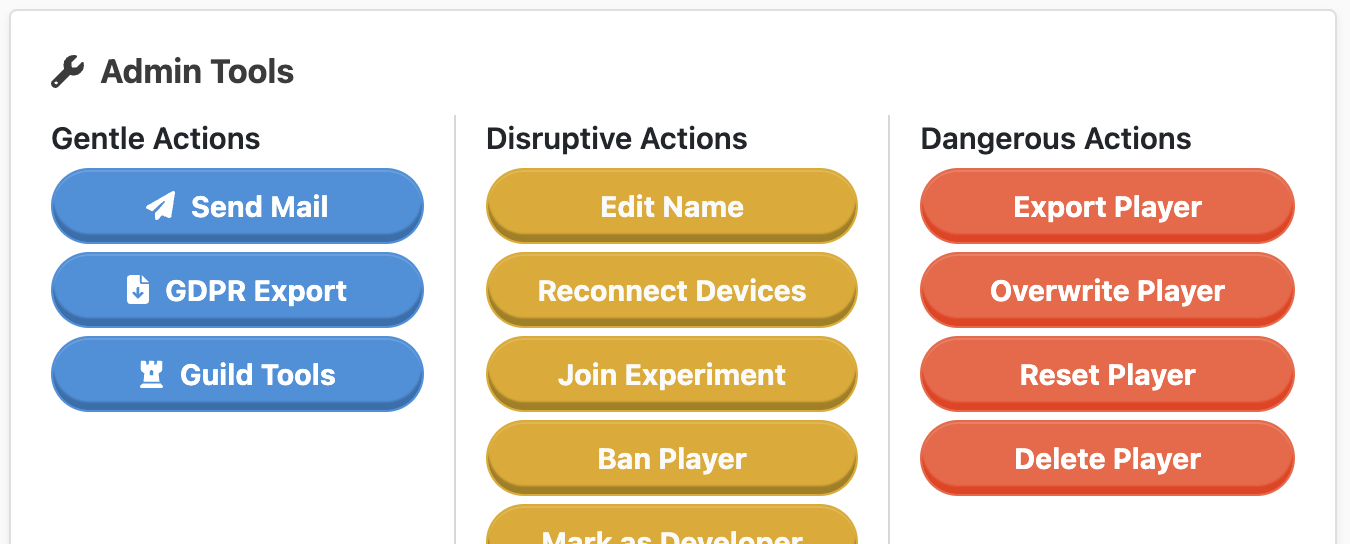

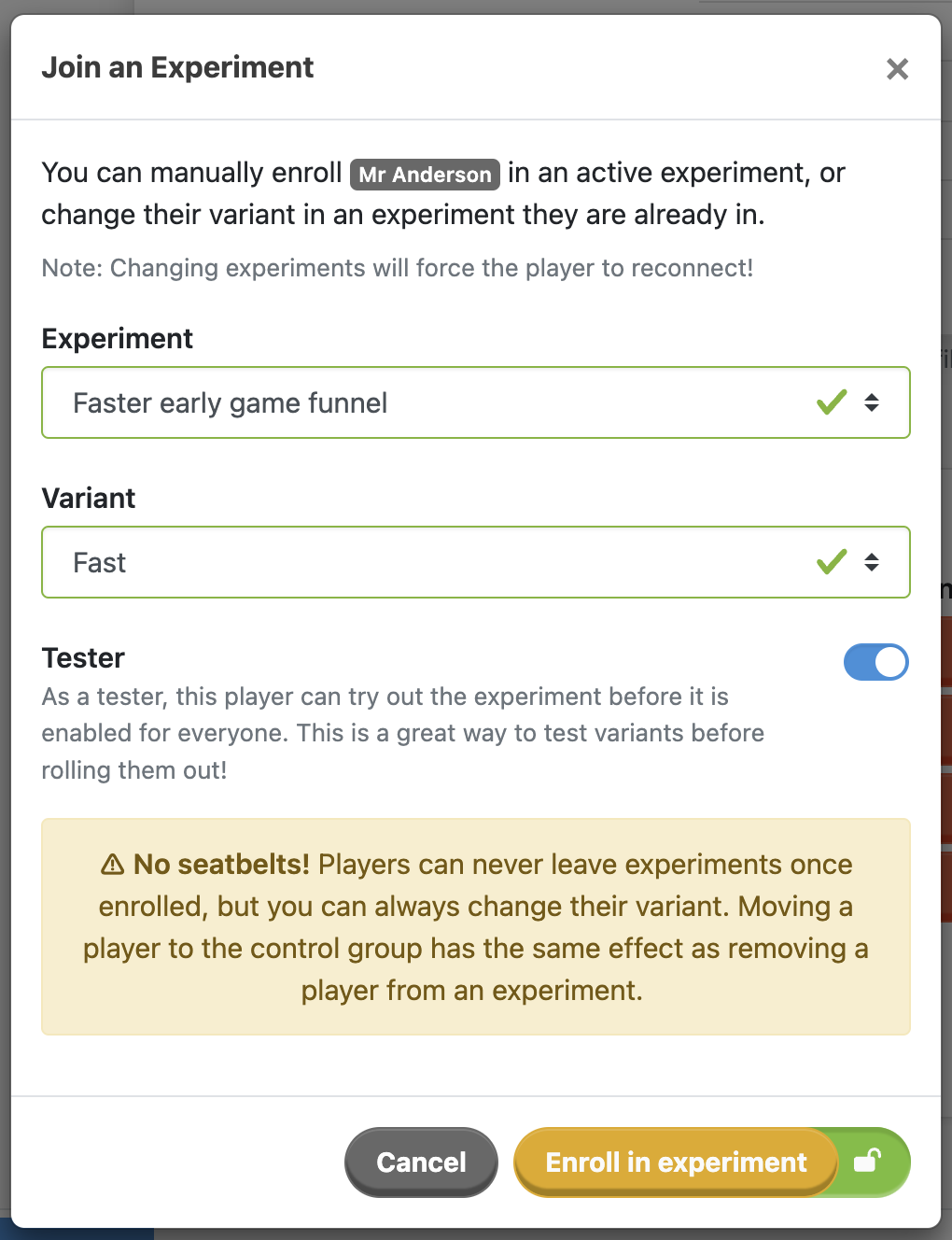

This is a great time to test out your Variants on some hand-picked players - maybe accounts owned by your test department. You can do this by navigating to a player's page and using the Join Experiment button in the admin tools section, and enabling the Tester switch.

If testing reveals any problems with the Variants, now is the time to go back to the sheets to get them right. Take as many iterations as you need to get things just right before you roll the experiment out to your players.

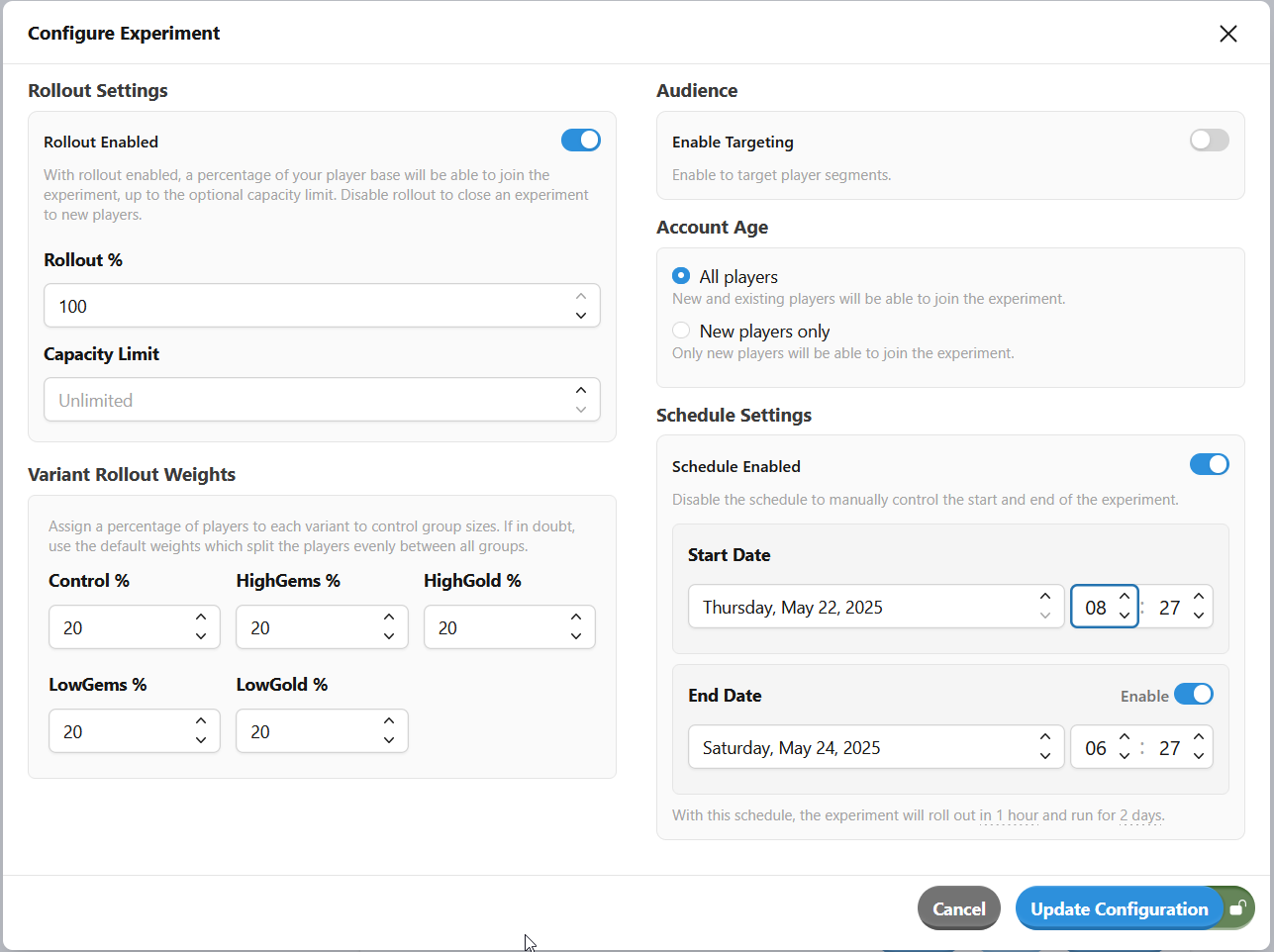

Once you are happy that the experiment and its Variants work as intended, you can configure the experiment rollout parameters by clicking on Configure.

In the Configure Experiment for Testing modal, you can configure an experiment's rollout settings and audience. Together these define how much of your player base will participate in the experiment. Additionally, you can adjust how the participating players are distributed across the variants.
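To build intuition for how a rollout percentage and variant weights interact, here is a minimal sketch of one common way to make such assignments deterministic. It is not a description of how the Metaplay server enrolls players: `RolloutSketch`, `Assign`, the hashing scheme, and the `inAudience` flag are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

public static class RolloutSketch
{
    // Maps a string to a stable number in [0, 1) using 64-bit FNV-1a.
    // string.GetHashCode() is avoided because it can differ between processes.
    static double UnitHash(string text)
    {
        unchecked
        {
            ulong hash = 14695981039346656037UL; // FNV offset basis
            foreach (byte b in Encoding.UTF8.GetBytes(text))
            {
                hash ^= b;
                hash *= 1099511628211UL; // FNV prime
            }
            return (hash >> 11) / 9007199254740992.0; // top 53 bits / 2^53
        }
    }

    // Returns the group the player lands in, or null if the player is not
    // part of the experiment. All parameters are hypothetical.
    public static string Assign(
        string playerId,
        string experimentId,
        double rolloutPercent,
        bool inAudience,
        IReadOnlyList<(string Group, int Weight)> weights)
    {
        if (!inAudience)
            return null;

        // Step 1: the rollout percentage decides whether the player takes part.
        if (UnitHash($"{experimentId}/rollout/{playerId}") >= rolloutPercent / 100.0)
            return null;

        // Step 2: the weights decide which group a participant joins.
        if (weights.Any(w => w.Weight < 0))
            throw new ArgumentException("Weights must not be negative.");
        int total = weights.Sum(w => w.Weight);
        if (total <= 0)
            return null;

        double pick = UnitHash($"{experimentId}/group/{playerId}") * total;
        double cumulative = 0;
        foreach ((string group, int weight) in weights)
        {
            cumulative += weight;
            if (pick < cumulative)
                return group;
        }
        return weights[weights.Count - 1].Group; // not reached: pick < total
    }
}
```

With a 10% rollout and weights of 1 for the control group and 1 for a single Variant, roughly 5% of the audience would end up in each group, and a given player would always get the same answer for the same experiment.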

⚠️ Heads up

You can edit the experiment in any phase, but you probably shouldn't. Once the experiment is rolled out, making changes may make it hard to understand the analytics data.

After you're happy with the configuration, you can roll it out immediately by hitting the Rollout button, or you can configure a schedule to automatically transition the experiment to the Active phase at a specific time.

Now your experiment is live and players are starting to see the Variants. The Statistics panel shows you how many players are in your experiment. More detailed rollout info is also available in the Variants card.

Once your experiment has been running long enough to gather all the analytics data that you need, you can hit Conclude to end it. If you've configured a schedule, it'll end automatically at the specified time. If you need to temporarily stop players from seeing an experiment (for example, because you spotted a mistake in one of the Variants), you can click Pause.

The experiment is temporarily suspended. Enrolling new players is disabled, and all non-Tester players will no longer see the experiment. For the Testers of this Experiment, however, the experiment is still active.

This is useful, for example, if an unexpected bug causes a problem in a production environment. If you need to update your game config to fix the issue, then you can, and only your Tester players will see the changes - as long as the experiment remains Paused.

Once you have fixed the temporary issue with the experiment and are satisfied with the updated configuration, you may now click Continue to resume the experiment and go back to the Active phase. Alternatively, you could also click Conclude to end the experiment immediately.

Now the experiment has finished - it's time to go and look at the results from your analytics pipeline. At this point, all of the players (including the Tester players) in the experiment will start seeing the base game config again.

If you concluded an experiment by accident, you can use the Restart button to put it back into the Testing phase, and from there you can roll out the experiment again.
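The phases and buttons described in this section can be summarized as a small state machine. The sketch below only mirrors the transitions and visibility rules mentioned on this page. The type and member names (`Phase`, `ExperimentAction`, `ExperimentLifecycle`) are hypothetical, not SDK types, and any transition not described above is simply treated as unavailable.

```csharp
using System;
using System.Collections.Generic;

public enum Phase { Testing, Active, Paused, Concluded }

// Named after the dashboard buttons mentioned in this section.
public enum ExperimentAction { Rollout, Pause, Continue, Conclude, Restart }

public static class ExperimentLifecycle
{
    static readonly Dictionary<(Phase, ExperimentAction), Phase> Transitions = new()
    {
        [(Phase.Testing, ExperimentAction.Rollout)] = Phase.Active,
        [(Phase.Active, ExperimentAction.Pause)] = Phase.Paused,
        [(Phase.Active, ExperimentAction.Conclude)] = Phase.Concluded,
        [(Phase.Paused, ExperimentAction.Continue)] = Phase.Active,
        [(Phase.Paused, ExperimentAction.Conclude)] = Phase.Concluded,
        [(Phase.Concluded, ExperimentAction.Restart)] = Phase.Testing,
    };

    // Returns false when the action is not available in the current phase.
    public static bool TryApply(Phase current, ExperimentAction action, out Phase next)
        => Transitions.TryGetValue((current, action), out next);

    // Does a player currently see their Variant instead of the base config?
    // `isTester` means the player joined this experiment as a Tester;
    // `isEnrolled` means the player was enrolled by the regular rollout.
    public static bool SeesVariant(Phase phase, bool isTester, bool isEnrolled)
    {
        switch (phase)
        {
            case Phase.Testing: return isTester;              // Testers only
            case Phase.Active: return isTester || isEnrolled; // everyone taking part
            case Phase.Paused: return isTester;               // Testers only
            default: return false;                            // Concluded: base config for all
        }
    }
}
```

For example, `SeesVariant(Phase.Paused, isTester: false, isEnrolled: true)` is false, which matches the behavior described for paused experiments: regular players fall back to the base config while Testers keep their Variant.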

You've launched your experiment into action! In the rest of the chapters, we'll go over various good-to-know information about experiments. If you're planning to run more than one experiment at a time, be sure to also check out Optimizing Experiments.

Players are constantly generating analytics events while they are playing your game. When they are in an experiment, these events are tagged with metadata about the experiment. You can measure the impact of each Variant on player behavior by analyzing these events and comparing players in the Variant against players in the control group.
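As an illustration of the kind of comparison you might run in your own analytics tooling, here is a minimal sketch of a two-proportion z-test on day one retention. This is generic statistics rather than anything provided by Metaplay: `RetentionComparison` and its methods are hypothetical names, and the counts would come from your own analytics pipeline.

```csharp
using System;

public static class RetentionComparison
{
    // Standard normal CDF, using the Abramowitz-Stegun 7.1.26 approximation
    // of erf (absolute error of roughly 1.5e-7, which is fine for a sketch).
    public static double NormalCdf(double x)
    {
        double t = Math.Abs(x) / Math.Sqrt(2.0);
        double k = 1.0 / (1.0 + 0.3275911 * t);
        double poly = k * (0.254829592 + k * (-0.284496736 + k * (1.421413741
                    + k * (-1.453152027 + k * 1.061405429))));
        double erf = 1.0 - poly * Math.Exp(-t * t);
        return x >= 0 ? 0.5 * (1.0 + erf) : 0.5 * (1.0 - erf);
    }

    // Compares the retained share of a Variant against the control group.
    // Returns the z statistic and the two-sided p-value.
    public static (double Z, double PValue) TwoProportionZTest(
        int controlRetained, int controlTotal,
        int variantRetained, int variantTotal)
    {
        if (controlTotal <= 0 || variantTotal <= 0)
            throw new ArgumentException("Both groups need at least one player.");

        double controlRate = (double)controlRetained / controlTotal;
        double variantRate = (double)variantRetained / variantTotal;

        // Pooled rate under the null hypothesis that both groups behave alike.
        double pooled = (double)(controlRetained + variantRetained)
                        / (controlTotal + variantTotal);
        double standardError = Math.Sqrt(
            pooled * (1.0 - pooled) * (1.0 / controlTotal + 1.0 / variantTotal));

        if (standardError == 0)
            return (0.0, 1.0); // everyone or no one retained: nothing to compare

        double z = (variantRate - controlRate) / standardError;
        double pValue = 2.0 * (1.0 - NormalCdf(Math.Abs(z)));
        return (z, pValue);
    }
}
```

A small p-value suggests the difference in retention is unlikely to be noise, but the threshold you accept and the sample size you need are decisions to make when planning the experiment, not after seeing the data.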

Metaplay is not a data analysis platform. Instead, it integrates with existing third-party and custom analytics solutions. For experiments, this works by passing the Analytics IDs that we defined in the game config to your analytics solution.

To integrate Experiment Analytics Events with your Analytics solution, see Analytics Processing.

An experiment has a failure mode, which is triggered if the Experiment Config is changed in an incompatible way while the experiment is running. The cases are as follows:

Removed Experiment Config: If the experiment is removed from the game config in a non-Concluded phase, the whole experiment is marked as invalid until the config is reintroduced. An invalid experiment is not visible to any player, including Testers, and it has no effect.

Removed Variant Config: If a Variant is removed from the Game Config, the Experiment will remain running, but the removed Variant is marked as invalid and the system behaves as if the Variant did not exist. This means that players who have been assigned to this Variant will instead see the base game config. Additionally, the assignment weight of the Variant behaves as if it were set to 0, preventing any new players from being enrolled into the Variant. The weights of the other Variants will be adjusted accordingly.

When the Variant is reintroduced into the Config, the effective weights are restored and players in the Variant start seeing the Variant config again.

No Variant Groups: If the experiment has no valid Variants (i.e., it effectively consists only of a Control Group), then it is marked as invalid and disabled. The experiment is not visible to any player, and it has no effect. When Variant groups are reintroduced, the experiment becomes valid again.
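The failure cases above can be sketched as a function that computes each group's effective share of new enrollments. This is an illustration under stated assumptions: `EffectiveWeights`, the `"Control"` key, and proportional renormalization of the remaining weights are all hypothetical, since this page only says that the other weights are "adjusted accordingly".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class EffectiveWeights
{
    // Hypothetical key used for the control group in this sketch.
    public const string ControlGroup = "Control";

    // Returns each group's share of new enrollments (summing to 1), or null
    // when the experiment is invalid because no valid Variant remains.
    public static Dictionary<string, double> Compute(
        IReadOnlyDictionary<string, int> configuredWeights,
        ISet<string> variantsStillInConfig)
    {
        bool IsValid(string group) =>
            group == ControlGroup || variantsStillInConfig.Contains(group);

        // An experiment with only a control group left is treated as invalid.
        bool anyValidVariant = configuredWeights.Keys
            .Any(g => g != ControlGroup && variantsStillInConfig.Contains(g));
        if (!anyValidVariant)
            return null;

        // A removed Variant behaves as if its weight were 0.
        var effective = configuredWeights.ToDictionary(
            kv => kv.Key,
            kv => IsValid(kv.Key) ? kv.Value : 0);

        // Assumption: the remaining weights are renormalized proportionally.
        double total = effective.Values.Sum();
        return effective.ToDictionary(
            kv => kv.Key,
            kv => total > 0 ? kv.Value / total : 0.0);
    }
}
```

Because the configured weights are never modified, passing the full set of Variants again restores the original shares, which mirrors how reintroducing a Variant into the config restores its effective weight.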

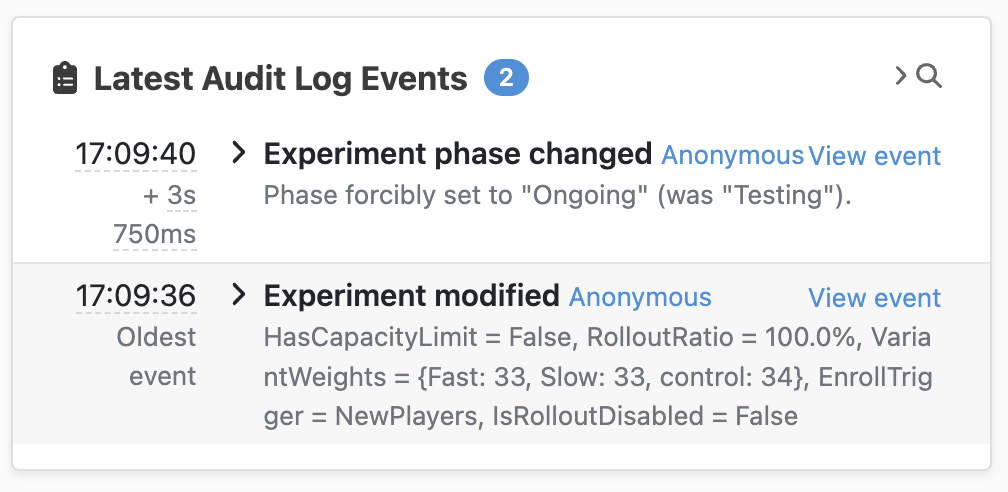

It's good to know that whenever you make changes to an experiment through the LiveOps Dashboard, an audit trail is left behind. This makes it easy to track changes over time. The trail for an individual experiment can be seen in the admin section of each Experiment's detail page:

For more information on audit logs, see LiveOps Dashboard Audit Logs.

PlayerModel.GameOnInitialLogin rather than the PlayerModel constructor, as the construction happens before processing the login and building the specialized config.

⚠️ Heads up

Since the server has to keep track of all active game config specializations, having many variants on the same segments can cause increased memory usage. It is therefore recommended to keep an eye on the memory usage statistics when enabling new experiments. See Optimizing Experiments for more optimizing tips.

Unfortunately, not everything in the game configs is safe to experiment with. The current limitations include:

Activables (for example, in-game events and offers) are currently not supported. As a workaround, we recommend creating multiple versions of your activable and using the targeting tools to separate them into parallel Audiences. In practice, this creates a natural experiment.

If you're an advanced user of experiments (i.e., you're planning to run multiple experiments at the same time), be sure to check out Optimizing Experiments.