
Configuring Cluster Topology

This page describes how Metaplay clustering works and how to customize the topology for your game.


A Cluster is a logical set of game server Nodes that are configured to communicate with each other and that, as a group, make up a scalable backend for a game. Because the Cluster is a logical concept, it may span multiple Kubernetes clusters, each in a different region of the world.

A Cluster Node (or just Node) is an instance of the game server running as part of the Cluster. Nodes are a logical concept of the game server: when run in the cloud, each Node maps to a single Kubernetes pod, and multiple Nodes may be scheduled to run on the same physical EC2 compute instance.

Metaplay achieves backend scalability by distributing the full workload over multiple game server instances that together form the logical Cluster. The Cluster can span multiple computers (usually AWS EC2 instances) in one or more physical regions. Its Nodes are divided into Node sets of one or more Nodes each, organized by the Cluster topology. The topology defines the Node sets that make up the Cluster, the region each Node set runs in, and the entity kinds each Node set is responsible for operating.

The primary way to distribute the workload across the Cluster is to assign entities, based on their kind, to the Cluster's Node sets. Distributing the entities of a kind among the Nodes within a Node set is called sharding; the available sharding strategies are described in Custom Entities.
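To illustrate the two levels of distribution described above (entity kind to Node set, then entity to a Node within that set), here is a minimal Python sketch. It is not Metaplay's implementation: the PLACEMENT and NODE_COUNTS tables and the node_for_entity helper are hypothetical, and the CRC32-modulo scheme is just one simple static sharding strategy chosen for illustration.

```python
import zlib

# Hypothetical placement table: entity kind -> Node set that hosts it.
PLACEMENT = {
    "GlobalStateManager": "service",
    "Player": "logic",
    "Guild": "logic",
}

# Hypothetical number of Nodes in each Node set.
NODE_COUNTS = {"service": 1, "logic": 3}


def node_for_entity(kind: str, entity_id: str) -> tuple[str, int]:
    """Return (Node set name, Node index within the set) for one entity.

    Step 1: the entity kind selects the Node set.
    Step 2: a stable hash of the entity ID, modulo the Node count,
    selects one Node in that set (assumed scheme, for illustration only).
    """
    node_set = PLACEMENT[kind]
    count = NODE_COUNTS[node_set]
    index = zlib.crc32(entity_id.encode("utf-8")) % count
    return node_set, index


if __name__ == "__main__":
    for kind, eid in [("Player", "Player:0000000001"),
                      ("Player", "Player:0000000002"),
                      ("GlobalStateManager", "GlobalStateManager:0")]:
        print(kind, eid, "->", node_for_entity(kind, eid))
```

Because the hash is deterministic, any Node can compute an entity's owner without coordination. The trade-off of plain modulo hashing is that changing the Node count remaps most entities, which is worth keeping in mind when comparing sharding strategies.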

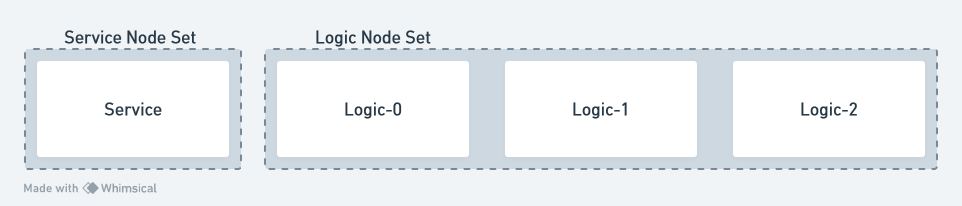

The default Metaplay Cluster topology uses two Node sets, one for the control plane and one for the data plane:

The service Node set is the control plane of the Cluster. It always consists of a single Node and hosts entities like GlobalStateManager, StatsCollectorManager, AdminApi (which serves the LiveOps Dashboard), various MatchMakerActors, etc. To assign an entity to this Node set, set the entity's NodeSetPlacement property to NodeSetPlacement.Service.

The logic Node set is the scalable data plane of the Cluster. It can contain multiple Nodes to allow horizontal scaling of the backend, and it hosts entities like Player, Session, Connection, Guild, Division, InAppPurchaseValidator, etc. Scalable workloads, where the work is divided among many entities, should be placed here by default. To assign an entity to this Node set, set its NodeSetPlacement property to NodeSetPlacement.Logic.

The game server can also always run in singleton mode, where all workloads are placed on a single game server instance. This is the default mode when running the game server locally and when getting started with cloud environments.

To customize the Cluster topology, first update the shards definition in the deployment's Helm values file. You can then use runtime options to override each entity kind's placement by listing the Node sets that the entity kind should be placed on.
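The two-step customization can be sketched as a lookup that prefers a runtime override over the entity's default placement and rejects overrides that name a Node set not defined in shards. This Python sketch is a hypothetical illustration: DEFAULT_PLACEMENT, resolve_placement, and the Battle and Chat entity kinds are assumptions, not Metaplay APIs.

```python
# Hypothetical default placements, as each entity's NodeSetPlacement
# property would declare them (Service -> 'service', Logic -> 'logic').
DEFAULT_PLACEMENT = {
    "GlobalStateManager": ["service"],
    "AdminApi": ["service"],
    "Player": ["logic"],
    "Guild": ["logic"],
    "Battle": ["logic"],  # hypothetical game-specific entity
    "Chat": ["logic"],    # hypothetical game-specific entity
}


def resolve_placement(kind, node_sets, overrides):
    """Return the names of the Node sets that host entity kind `kind`.

    `node_sets` holds the Node set names defined under `shards`.
    `overrides` mimics a runtime placement override table. An override
    wins over the default, but it may only name Node sets that exist.
    """
    placement = overrides.get(kind, DEFAULT_PLACEMENT[kind])
    unknown = [name for name in placement if name not in node_sets]
    if unknown:
        raise ValueError(f"{kind}: unknown Node set(s) {unknown}")
    return list(placement)


if __name__ == "__main__":
    node_sets = {"service", "logic", "battle", "chat"}
    overrides = {"Battle": ["battle"], "Chat": ["chat"]}
    for kind in ("Player", "Battle", "Chat", "AdminApi"):
        print(kind, "->", resolve_placement(kind, node_sets, overrides))
```

The existence check reflects the order of the steps: a Node set has to be defined in shards before an override can place entities on it.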

Each entry in shards defines one Node set. The most important parameters are:

| Field | Type | Description |
|---|---|---|
| name | string | The name of the Node set. |
| singleton | true/false | True if the server runs in singleton mode. In singleton mode, there is only one Node, which runs all entities, so no sharding configuration needs to be defined in the server config. |
| admin | true/false | In non-singleton mode, set to true if the Node set runs the AdminApi entity. AdminApi requests are routed to these Nodes. |
| publicWebApi | true/false | In non-singleton mode, set to true if the Node set runs the PublicWebApi entity. PublicWebApi requests are routed to these Nodes. |
| connection | true/false | In non-singleton mode, set to true if the Node set runs the Connection entity. Client connections are routed to these Nodes. |
| public | true/false | If true, a public IP address is allocated for the Nodes. |
| scaling | static/dynamic | If static, the Node set has nodeCount Nodes. If dynamic, it has between minNodeCount and maxNodeCount Nodes, controlled by dynamic scaling. |
| nodeCount | 1..N | With scaling: static, the number of Nodes in the Node set. |
| minNodeCount | 1..N | With scaling: dynamic, the minimum number of Nodes in the Node set. |
| maxNodeCount | 1..N | With scaling: dynamic, the maximum number of Nodes in the Node set. |
| podEnv | list | The environment variables set on the Nodes in the Node set. |
| podEnv[].key | string | The name of the environment variable. |
| podEnv[].value | string | The value of the environment variable. |
| podPorts | list | The custom port forwards. |
| podPorts[].containerPort | int | The game server port. |
| podPorts[].hostPort | int | The publicly accessible port. |
| podPorts[].protocol | TCP/UDP | The protocol to forward. |
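The rules in the table can be expressed as a small checker. The Python sketch below validates one shards entry, given as a parsed dict. validate_shard is a hypothetical helper, not part of Metaplay's tooling, and treating a missing scaling field as static is an assumption based on the battle and chat example on this page, which sets only nodeCount.

```python
def validate_shard(shard: dict) -> list[str]:
    """Return the problems found in one `shards` entry (empty list if none)."""
    errors = []
    if not isinstance(shard.get("name"), str) or not shard["name"]:
        errors.append("name must be a non-empty string")

    # Assumption: a missing 'scaling' field behaves like 'static'.
    scaling = shard.get("scaling", "static")
    if scaling == "static":
        count = shard.get("nodeCount")
        if not isinstance(count, int) or count < 1:
            errors.append("static scaling requires nodeCount >= 1")
    elif scaling == "dynamic":
        lo, hi = shard.get("minNodeCount"), shard.get("maxNodeCount")
        if not (isinstance(lo, int) and isinstance(hi, int) and 1 <= lo <= hi):
            errors.append("dynamic scaling requires 1 <= minNodeCount <= maxNodeCount")
    else:
        errors.append(f"unknown scaling mode {scaling!r}")

    # Each podEnv entry needs a string key and value.
    for env in shard.get("podEnv", []):
        if not isinstance(env.get("key"), str) or not isinstance(env.get("value"), str):
            errors.append(f"podEnv entry needs string key and value: {env}")

    # Each podPorts entry needs integer ports and a TCP or UDP protocol.
    for port in shard.get("podPorts", []):
        if port.get("protocol") not in ("TCP", "UDP"):
            errors.append(f"podPorts protocol must be TCP or UDP: {port}")
        for field in ("containerPort", "hostPort"):
            if not isinstance(port.get(field), int):
                errors.append(f"podPorts {field} must be an int: {port}")
    return errors


if __name__ == "__main__":
    print(validate_shard({"name": "battle", "nodeCount": 1}))  # prints []
    print(validate_shard({"name": "logic", "scaling": "dynamic",
                          "minNodeCount": 4, "maxNodeCount": 2}))
```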

Here's an example that adds two Node sets, battle and chat, and places the Battle and Chat entities on them, giving the Battle and Chat workloads dedicated server instances. First, add the Node sets to shards in the Helm values file:

```yaml
shards:
  ...
  - name: battle
    nodeCount: 1 # one node
  - name: chat
    nodeCount: 1 # one node
```

Then override the entity placement in the runtime options:

```yaml
# Override the Battle and Chat entities to live on the 'battle' and 'chat' node sets
Clustering:
  EntityPlacementOverrides:
    Battle: [ battle ]
    Chat: [ chat ]
```

To run a clustered game server locally, you can use the configuration below, which defines a Cluster with a single service Node and two logic Nodes. The commands further down read it from Config/LocalClusterConfig.json:

```json
[
  {
    "name": "service",
    "hostName": "127.0.0.1",
    "remotingPort": 6000,
    "nodeCount": 1
  },
  {
    "name": "logic",
    "hostName": "127.0.0.1",
    "remotingPort": 6010,
    "nodeCount": 2
  }
]
```

You can then build the game server C# project:

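```console
Backend/Server$ dotnet build
```

For example, you could start three copies of the game server, each in its own terminal window with its own options. The first runs the service Node on remoting port 6000; the other two run the logic Nodes on remoting ports 6010 and 6011, each with its own client, WebSocket, and public web API ports: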

```console
Backend/Server$ dotnet run --no-build -RemotingPort=6000 -ShardingConfig="Config/LocalClusterConfig.json"
Backend/Server$ dotnet run --no-build -RemotingPort=6010 -ShardingConfig="Config/LocalClusterConfig.json" --System:ClientPorts:0=9339 --WebSockets:ListenPorts:0=9380 --PublicWebApi:ListenPort=5560 -EnableMetrics=false
Backend/Server$ dotnet run --no-build -RemotingPort=6011 -ShardingConfig="Config/LocalClusterConfig.json" --System:ClientPorts:0=9340 --WebSockets:ListenPorts:0=9381 --PublicWebApi:ListenPort=5561 -EnableMetrics=false
```
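The three commands follow a regular pattern. As an illustration, the Python sketch below expands the local cluster config into individual Nodes and builds one argument list per Node. It assumes, as the commands suggest but this page does not state, that the Nodes of a Node set use consecutive remoting ports starting at the set's remotingPort, and that each additional logic Node shifts its client, WebSocket, and public web API ports by one. node_commands and expand_nodes are hypothetical helpers; they only build argument lists and do not start any processes.

```python
import json

# Same content as Config/LocalClusterConfig.json.
LOCAL_CLUSTER_CONFIG = json.loads("""
[
  {"name": "service", "hostName": "127.0.0.1", "remotingPort": 6000, "nodeCount": 1},
  {"name": "logic", "hostName": "127.0.0.1", "remotingPort": 6010, "nodeCount": 2}
]
""")

CONFIG_PATH = "Config/LocalClusterConfig.json"


def expand_nodes(node_sets):
    """Yield (set name, host, remoting port, index within set) for every Node.

    Assumption: the Nodes of a set use consecutive ports from remotingPort.
    """
    for node_set in node_sets:
        for i in range(node_set["nodeCount"]):
            yield node_set["name"], node_set["hostName"], node_set["remotingPort"] + i, i


def node_commands(node_sets):
    """Build one `dotnet run` argument list per Node, mirroring the commands above."""
    seen = set()
    commands = []
    for name, host, port, index in expand_nodes(node_sets):
        if (host, port) in seen:
            raise ValueError(f"remoting port collision at {host}:{port}")
        seen.add((host, port))
        args = ["dotnet", "run", "--no-build",
                f"-RemotingPort={port}", f"-ShardingConfig={CONFIG_PATH}"]
        if name == "logic":
            # Assumed pattern: each logic Node gets its own client-facing ports.
            args += [f"--System:ClientPorts:0={9339 + index}",
                     f"--WebSockets:ListenPorts:0={9380 + index}",
                     f"--PublicWebApi:ListenPort={5560 + index}",
                     "-EnableMetrics=false"]
        commands.append(args)
    return commands


if __name__ == "__main__":
    for args in node_commands(LOCAL_CLUSTER_CONFIG):
        print(" ".join(args))
```

Each argument list could be passed to subprocess.Popen(args, cwd="Backend/Server") to launch a Node; running the Nodes in separate terminals, as above, keeps each Node's log output readable. The collision check catches configs whose Node sets' port ranges overlap on the same host.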