UDP Passthrough

This article describes how to implement a UDP server that can be embedded within a Metaplay backend.

Working knowledge of game server programming - This page provides advanced uses of Metaplay's server tools. If you need some pointers, take a look at Introduction to Entities and Actors.

Familiarity with runtime options - This guide assumes you have some familiarity with runtime options. If in doubt, check out Working with Runtime Options.

Self-hosted Infra Stack - Custom UDP connections on the Metaplay Managed Service are currently an experimental feature, and you need to contact Metaplay for access. If you are self-hosting, refer to Metaplay's Terraform module documentation.

Accessing the Repository

Metaplay's Terraform modules are available in the private metaplay-shared GitHub organization. Please reach out to your account representative for access to it.

A UDP passthrough is a mechanism that allows custom UDP servers to be embedded within a Metaplay backend, and it supports both local and cloud deployments transparently. Using a UDP server in conjunction with the Metaplay backend is an advanced use case that's not necessary for most games, but it can be useful if you already have a UDP server implemented for your game and wish to integrate it with Metaplay.

As an example, we'll integrate a minimal echo UDP server into Metaplay. Here are the steps we'll go through:

First, we'll create a separate C# project called ExampleUdpEchoServer to define the UDP server:

namespace ExampleUdpEchoServer

{

public class Server

{

readonly int _port;

readonly string _identityString;

UdpClient _socket;

int _numBytesTransferred;

public Server(int port, string identityString)

{

_port = port;

_identityString = identityString;

}

public Task InitializeAsync()

{

_socket = new UdpClient(_port);

return Task.CompletedTask;

}

public async Task ServeAsync(CancellationToken ct)

{

// Reply to every packet

for (;;)

{

UdpReceiveResult msg = await _socket.ReceiveAsync(ct);

string replyStr = System.FormattableString.Invariant($"Reply from {_identityString}. Received {msg.Buffer.Length} bytes.");

byte[] reply = System.Text.Encoding.UTF8.GetBytes(replyStr);

_socket.Send(reply, endPoint: msg.RemoteEndPoint);

_numBytesTransferred += reply.Length;

}

}

public int NumBytesTransferred => _numBytesTransferred;

}

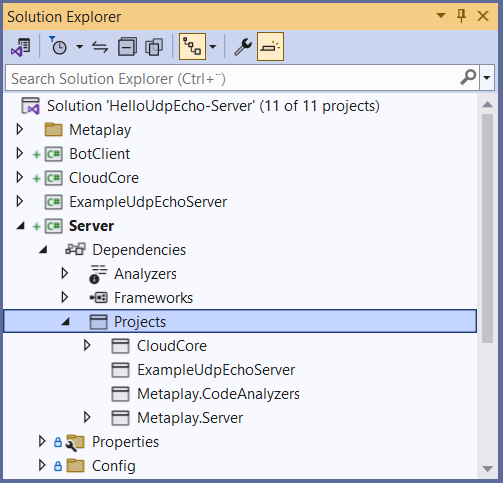

}The next step is to add the project to the backend solution. Then, for the Server project, we'll add a Project Reference to the custom project. In our example, this results in the following structure:

For the custom server to integrate into Metaplay's backend lifecycle, it must be encapsulated into an entity. We can fulfill this by creating a host actor that inherits from the SDK class UdpPassthroughHostActorBase. In our echo sample, this results in the following entity actor:

    class UdpHostActor : UdpPassthroughHostActorBase
    {
        ExampleUdpEchoServer.Server _server;

        protected override async Task InitializeSocketAsync(int port)
        {
            _server = new ExampleUdpEchoServer.Server(port, identityString: _entityId.ToString());
            await _server.InitializeAsync();
        }

        protected override async Task ServeAsync(CancellationToken ct)
        {
            await _server.ServeAsync(ct);
        }
    }

Pro tip!

There is at most one UdpHostActor running on each backend server process. This means you can use global state in the custom server implementation.

In Options.local.yaml, add:

    UdpPassthrough:
      Enabled: true
      LocalServerPort: 1234

The integrated UDP server should now be running in local singleton server mode. We can verify that the integration works by starting the server and testing locally against 127.0.0.1:1234 or whichever port you configured in the previous step.
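A quick way to run that local check is a small client script. The following is a minimal sketch, not part of the Metaplay SDK: the helper name `probe_udp`, the `ping` payload, and the 2-second timeout are all assumptions chosen for illustration.

```python
import socket


def probe_udp(host, port, payload=b"ping", timeout=2.0):
    """Send one datagram and return the decoded reply, or None if nothing comes back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(payload, (host, port))
        try:
            reply, _addr = sock.recvfrom(4096)
        except (socket.timeout, ConnectionError):
            # No reply in time, or the OS reported that the port is closed.
            return None
    return reply.decode("utf-8", errors="replace")
```

Calling `probe_udp("127.0.0.1", 1234)` against the echo sample above should return a string of the form `Reply from <identity>. Received 4 bytes.`, since that is what the sample server sends back for a 4-byte payload. A return value of `None` suggests the server is not listening on that port.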

To avoid hard-coding ports and to programmatically find the UDP server gateway, we use the UdpPassthroughGateways helper, which works in all deployment types:

    UdpPassthroughGateways.Gateway[] gateways = UdpPassthroughGateways.GetPublicGateways();
    UdpPassthroughGateways.Gateway chosenGateway = gateways.PickRandom();
    TestEchoWith(chosenGateway.FullyQualifiedDomainNameOrAddress, chosenGateway.Port);

With the implementation running locally, it's time to set up the necessary cloud configurations. For the cloud deployment, we need to declare the port in the game server shard's Helm values as follows:

    # Enable the new Metaplay Kubernetes operator.
    experimental:
      gameserversV0Api:
        enabled: true

    # Make shards public and open a port
    shards:
      - name: logic
        nodeCount: 1
        public: true
        podEnv:
          - name: Metaplay_UdpPassthrough__CloudPublicIpv4Port
            value: "1234"
        podPorts:
          - containerPort: 1234
            hostPort: 1234
            protocol: UDP

In this example, we make the logic nodes' UDP port 1234 publicly accessible. The public: true annotation makes the game server pod request a public IP when deployed in the cloud. The podEnv defines the extra environment variables for the game server. We use this to set a well-known variable Metaplay_UdpPassthrough__CloudPublicIpv4Port to enable the game server-side UDP passthrough system on these nodes for the chosen port.

Finally, podPorts exposes the desired port. containerPort is the port the game server process listens on, hostPort is the publicly visible port that clients connect to, and protocol selects UDP. Unless port remapping is needed, containerPort and hostPort should be the same. If the values differ, hostPort should match the value set in Metaplay_UdpPassthrough__CloudPublicIpv4Port, and containerPort should match the value set in UdpPassthrough:LocalServerPort earlier.
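The consistency rules for the port mapping can be written down as a small check. This is only a sketch of the rules stated above, not a Metaplay tool: the function name `check_port_mapping` is hypothetical, and the podPorts entry is represented as a plain dictionary rather than parsed from the Helm values file.

```python
def check_port_mapping(pod_port, cloud_public_port, local_server_port):
    """Return a list of problems; an empty list means the mapping is consistent."""
    problems = []
    # The passthrough needs UDP; an omitted protocol is treated as a problem here.
    if str(pod_port.get("protocol", "")).upper() != "UDP":
        problems.append("protocol must be UDP")
    # hostPort is what clients connect to, so it must match the public port option.
    if pod_port.get("hostPort") != cloud_public_port:
        problems.append("hostPort must equal UdpPassthrough:CloudPublicIpv4Port")
    # containerPort is what the server process binds, so it must match the local port option.
    if pod_port.get("containerPort") != local_server_port:
        problems.append("containerPort must equal UdpPassthrough:LocalServerPort")
    return problems


same_ports = {"containerPort": 1234, "hostPort": 1234, "protocol": "UDP"}
remapped = {"containerPort": 1234, "hostPort": 9001, "protocol": "UDP"}

print(check_port_mapping(same_ports, 1234, 1234))  # consistent: no problems
print(check_port_mapping(remapped, 9001, 1234))    # consistent: remapped correctly
print(check_port_mapping(remapped, 1234, 1234))    # hostPort does not match the public port
```

The second call shows a valid remapping: clients connect to port 9001 while the server process still listens on 1234.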

Environment Variables as Options

The Metaplay_UdpPassthrough__CloudPublicIpv4Port environment variable is mapped as if it were the UdpPassthrough:CloudPublicIpv4Port options segment in Options.<env>.yaml. You may define any options field with environment variables, but it's recommended to use the Options.<env>.yaml files where possible to avoid scattering config in other systems.
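To illustrate the naming convention, the sketch below converts an environment variable name into the options path it corresponds to. It generalizes from the single example above and from the usual .NET configuration convention of using a double underscore as the section separator. The exact rules Metaplay applies are an assumption here, and `env_var_to_option_path` is a hypothetical helper.

```python
def env_var_to_option_path(name, prefix="Metaplay_"):
    """Map an environment variable name to an options path, or None if the prefix is missing."""
    if not name.startswith(prefix):
        return None
    # Assumed convention: a double underscore separates the options section from the field.
    return name[len(prefix):].replace("__", ":")


print(env_var_to_option_path("Metaplay_UdpPassthrough__CloudPublicIpv4Port"))
# prints "UdpPassthrough:CloudPublicIpv4Port"
```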

If the server is not running in singleton mode, i.e., if there are multiple server nodes, you must define the nodes on which the UDP passthrough should run. You can do this by updating the ShardingTopologies in Options.base.yaml. For example, to run the UDP server on all logic nodes, we would define:

    Clustering:
      ShardingTopologies:
        MyTopology:
          # Run it among the logic nodes
          logic:
            ...
            - UdpPassthrough

The HostActor is an entity, and it can communicate with other entities in the game with normal MetaMessages and EntityAsks. To demonstrate, we'll implement a minimal AdminApi controller that allows inspecting the custom server's NumBytesTransferred, which is the total number of bytes sent.

First, let's add EntityAsk messages to inspect the state of UdpEchoServer:

    public static class MessageCodes
    {
        ...
        public const int UdpEchoServerStatusRequest = 18104;
        public const int UdpEchoServerStatusResponse = 18105;
    }

    [MetaMessage(MessageCodes.UdpEchoServerStatusRequest, MessageDirection.ServerInternal)]
    public class UdpEchoServerStatusRequest : EntityAskRequest<UdpEchoServerStatusResponse>
    {
    }

    [MetaMessage(MessageCodes.UdpEchoServerStatusResponse, MessageDirection.ServerInternal)]
    public class UdpEchoServerStatusResponse : EntityAskResponse
    {
        public int NumBytesTransferred;

        UdpEchoServerStatusResponse() { }

        public UdpEchoServerStatusResponse(int numBytesTransferred)
        {
            NumBytesTransferred = numBytesTransferred;
        }
    }

    class UdpHostActor
    {
        ...
        [EntityAskHandler]
        UdpEchoServerStatusResponse HandleUdpEchoServerStatusRequest(UdpEchoServerStatusRequest request)
        {
            return new UdpEchoServerStatusResponse(_server.NumBytesTransferred);
        }
    }

Then, let's implement an AdminApi Controller:

    public class UdpEchoApiController : GameAdminApiController
    {
        [HttpGet("udpecho/gateways")]
        [RequirePermission(MetaplayPermissions.Anyone)]
        public object GetGateways()
        {
            return UdpPassthroughGateways.GetPublicGateways();
        }

        [HttpGet("udpecho/status")]
        [RequirePermission(MetaplayPermissions.Anyone)]
        public async Task<object> GetStatusAsync()
        {
            int numBytesTransferred = 0;

            // Sum data from all servers.
            UdpPassthroughGateways.Gateway[] gateways = UdpPassthroughGateways.GetPublicGateways();
            foreach (UdpPassthroughGateways.Gateway gateway in gateways)
            {
                UdpEchoServerStatusResponse response = await EntityAskAsync(gateway.AssociatedEntityId, new UdpEchoServerStatusRequest());
                numBytesTransferred += response.NumBytesTransferred;
            }

            return new
            {
                NumBytesTransferred = numBytesTransferred
            };
        }
    }

Danger: Unsafe Example

udpecho/gateways and udpecho/status are using [RequirePermission(MetaplayPermissions.Anyone)] instead of [RequirePermission(GamePermissions.ApiMyPermission)] and therefore allow anyone with access to the LiveOps Dashboard to access these APIs. Real-world controllers should always require authorization.

Now, accessing api/udpecho/gateways shows the set of public domain-port combinations of the running UDP Passthrough gateways, and api/udpecho/status allows us to observe the total number of bytes transferred. Note that in the real world, exporting metrics of transfer amounts should be done with Prometheus.Counter.
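On the client side, the list of gateways can be used for simple failover: try the gateways in random order and keep the first one that answers. The sketch below assumes the client has already obtained host-port pairs by some means. The JSON shape returned by api/udpecho/gateways is not shown in this article, so the function takes plain tuples, and both `pick_responsive_gateway` and the `probe` callback are hypothetical names.

```python
import random


def pick_responsive_gateway(gateways, probe, rng=random):
    """Try gateways in random order; return the first (host, port) whose probe succeeds."""
    candidates = list(gateways)
    # Shuffling spreads clients across gateways, similar to picking one at random.
    rng.shuffle(candidates)
    for host, port in candidates:
        if probe(host, port):
            return (host, port)
    # No gateway answered.
    return None
```

Here `probe` is any callable that takes a host and a port and returns a truthy value when the gateway replied, for example a function that sends a test datagram and waits for the echo.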

To help debug network and configuration issues, the Metaplay backend contains a UDP debug server. In Options.base.yaml, enable UseDebugServer as follows:

      UdpPassthrough:
        Enabled: true
        LocalServerPort: 1234
    +   UseDebugServer: true

This replaces the UDP server with a built-in UDP debug server. In our example, the UdpHostActor would be replaced with the debug implementation. With the debug server in place, we can test connectivity by sending it UDP messages and inspecting responses. Here's an example using a Python script:

    import socket

    def ask(msg, ip, port):
        # Create the socket before the try block so that close() is only called on a socket that exists.
        s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        try:
            s.sendto(msg.encode("utf-8"), (ip, port))
            s.settimeout(5)
            (reply, addr) = s.recvfrom(4096)
            print("reply: ", reply.decode("utf-8"))
        finally:
            s.close()

    host = input("host:")
    port = int(input("port:"))
    ipv4 = socket.gethostbyname(host)
    ask("whoami", ipv4, port)

We would get the following response:

    host: lovely-wombats-build-quickly-udp.p1.metaplay.io
    port: 9001
    reply: You are 88.88.88.1:64607. I am logic-1.lovely-wombats-build-quickly-udp.p1.metaplay.io, entity UdpPassthrough:0000000002, local port 1234. LB is "lovely-wombats-build-quickly-udp.p1.metaplay.io".

You can use ask("help", ipv4, port) for more details.
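When checking many nodes, it can help to extract the fields from the whoami reply instead of reading it by eye. The sketch below is based only on the single sample reply shown above. The exact reply format is not specified in this article, so the pattern is an assumption and `parse_whoami` is a hypothetical helper.

```python
import re

# Pattern inferred from the sample reply; the real format may differ.
WHOAMI_PATTERN = re.compile(
    r"You are (?P<client>\S+)\. "
    r"I am (?P<node>[^,]+), "
    r"entity (?P<entity>[^,]+), "
    r"local port (?P<local_port>\d+)\."
)


def parse_whoami(reply):
    """Return the fields of a whoami reply as a dict, or None if the format is not recognized."""
    match = WHOAMI_PATTERN.search(reply)
    if match is None:
        return None
    fields = match.groupdict()
    fields["local_port"] = int(fields["local_port"])
    return fields
```

The `client` field is the address the server saw the packet come from, and `local_port` is the port the server process is bound to, which can be compared against the configured UdpPassthrough:LocalServerPort.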