Optimizing Experiments

Experiments are a tool to conduct controlled studies of how changes to your game configs might affect your players.

The simplest approach to experiments is to only run one experiment at a time. This would give you clearer results and avoid any potential performance issues. If, however, you want to run multiple experiments at a time, there are a few things to keep in mind.

The Metaplay SDK allows you to define as many experiments as you like - so what happens if you have more than one running at the same time? The answer is straightforward: if a player is enrolled in multiple experiments, then they will see all of the Variants that they have been assigned from all of the experiments. For example, a player might be taking part in one experiment where they see stronger enemies on level one of your game, and another experiment where they see more powerful weapons. In this case, the player will see both stronger enemies and more powerful weapons.

While this answer is straightforward, the consequences are not. For example, how will you be able to measure the results of the stronger enemies experiment if some of the players also have more powerful weapons? The answer depends on how much the experiments overlap, how able you are to extract the relevant data from your analytics system, and how well you are able to analyze it.

Another possible pitfall is a player being in two experiments that both alter the same variables. For example, one experiment could make level one enemies stronger while another makes all enemies weaker. If a player is in both experiments, what will the effect be on level one enemies? Because Variants apply their changes as absolute values rather than relative ones, the answer depends on the order in which the Variants are applied to the player: the player may end up with weaker or stronger enemies. Similarly, experiments alter the whole config item instead of individual values. For example, one experiment could make an enemy stronger while another gives the same enemy more life. If a player is in both experiments, only one of them will apply to that enemy, meaning the enemy is either stronger or has more life, but not both. For convenience, we mark config items in the dashboard with a V or a P badge: V indicates that the value has changed, while P indicates that a value is part of a patch but is identical to the baseline.

The order in which the changes are applied is the same order as the Experiments are defined in the Game Config - early changes in the Config will always be overwritten by later changes. As this can cause unexpected behavior, we recommend not running parallel experiments on the same values.
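The overwrite behavior described above can be illustrated with a minimal, self-contained sketch. It does not use the Metaplay SDK: `EnemyInfo`, `PatchOrderSketch`, and the dictionary-based patches are hypothetical stand-ins chosen only to show whole-item replacement applied in definition order.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a config item; not an SDK type.
public sealed class EnemyInfo
{
    public readonly int Strength;
    public readonly int Life;
    public EnemyInfo(int strength, int life) { Strength = strength; Life = life; }
}

public static class PatchOrderSketch
{
    // Applies each patch in definition order. A patch replaces whole items,
    // so a later patch to the same item discards an earlier patch's changes.
    public static Dictionary<string, EnemyInfo> Apply(
        IReadOnlyDictionary<string, EnemyInfo> baseline,
        IEnumerable<IReadOnlyDictionary<string, EnemyInfo>> patchesInDefinitionOrder)
    {
        var result = new Dictionary<string, EnemyInfo>();
        foreach (var entry in baseline)
            result[entry.Key] = entry.Value;
        foreach (var patch in patchesInDefinitionOrder)
            foreach (var entry in patch)
                result[entry.Key] = entry.Value; // whole-item overwrite, no per-field merge
        return result;
    }

    public static void Main()
    {
        var baseline = new Dictionary<string, EnemyInfo> { ["goblin"] = new EnemyInfo(10, 100) };
        // Each patch stores the full item, derived from the baseline values.
        var strongerEnemies = new Dictionary<string, EnemyInfo> { ["goblin"] = new EnemyInfo(20, 100) };
        var moreLife = new Dictionary<string, EnemyInfo> { ["goblin"] = new EnemyInfo(10, 200) };

        var goblin = Apply(baseline, new[] { strongerEnemies, moreLife })["goblin"];
        Console.WriteLine($"Strength={goblin.Strength}, Life={goblin.Life}"); // Strength=10, Life=200
    }
}
```

In this sketch the strength increase is lost because the later patch carries the baseline strength along with its life change. Swapping the order of the two patches would keep the strength change and lose the life change instead.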

Because of these potential downsides, we strongly recommend that you ensure that players can only be enrolled in a single experiment at a time unless you are confident that the experiments do not contaminate each other. It might not always be an issue - sometimes it's OK for a player to be in more than one experiment. When it is not OK, you want to avoid overlapping experiments.

There are a few ways to avoid a player being in multiple experiments:

This section explains why using MetaRef within config data can cause increased server memory usage when using overlapping Experiments, and how to address this problem. Note that this problem only concerns the server, not the client, because the client only ever deals with one player's game config at a time.

The brief explanation is that because MetaRefs contain concrete in-memory references at runtime, they diminish the server's ability to reuse unmodified game config item instances across different experiment combinations. This causes the server to duplicate more config items in memory than it otherwise would. The problem tends to become especially severe if the config contains long MetaRef reference chains. For a more detailed explanation of the implementation and the problem, see section Config Memory Sharing Explanation.

To help you recognize this problem on your servers, the SDK produces metrics about the amount of config item duplication. See Game Config Duplication Metrics for more information.

The SDK also has a Config Reference Analyzer tool in the Unity Editor for exploring the MetaRefs within the game config and the duplication they cause. This tool can help you identify particularly troublesome MetaRefs.

A way to reduce the duplication is to replace select MetaRefs with MetaConfigIds, which do not cause duplication. See Using MetaConfigId Instead of MetaRef.

In addition to considering MetaRefs, keep in mind that an additional way to limit experiment-related memory usage is Avoiding Overlapping Experiments.

This section describes how the server keeps game configs with multiple experiments in memory and how MetaRefs affect that.

Each player can belong to a number of experiments, and in each of those experiments to some Variant (or the control group). The combination of the Variants (or control group) the player is in determines the specialization of the game config used for the player. For example, the combination of Variants EnemyStrengthExperiment/StrongerEnemies and WeaponPowerExperiment/MorePowerfulWeapons is one specialization, and the combination of Variants EnemyStrengthExperiment/WeakerEnemies and WeaponPowerExperiment/MorePowerfulWeapons is a different specialization.

For each player online, the server needs to have the correct game config specialization in memory. The more active experiments you have (and the more Variants in them), and the more overlap there is between them, the more possible specializations there are. Each unique specialization uses some amount of memory; however, the server does not generally keep a full copy of the entire config in memory for each specialization. In the best case, when a Variant only modifies specific game config items, only those config items will need to be duplicated in memory. Unmodified items can reuse the item instances from the baseline game config.
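To get a feel for how quickly the number of specializations can grow, here is a rough back-of-the-envelope sketch. It assumes the worst case where every player can be enrolled in all of the given experiments at once, so each experiment contributes its Variants plus the control group and the choices multiply. `SpecializationCountSketch` and `MaxSpecializations` are illustrative names, not part of the SDK.

```csharp
using System;
using System.Linq;

public static class SpecializationCountSketch
{
    // Upper bound on distinct specializations under full overlap:
    // the product of (variant count + 1 for the control group) per experiment.
    public static long MaxSpecializations(params int[] variantCounts) =>
        variantCounts.Aggregate(1L, (product, variants) => product * (variants + 1));

    public static void Main()
    {
        // Two experiments: one with 2 Variants, one with 1 Variant.
        Console.WriteLine(MaxSpecializations(2, 1));       // 6
        // Four fully overlapping experiments with 2 Variants each.
        Console.WriteLine(MaxSpecializations(2, 2, 2, 2)); // 81
    }
}
```

This is only an upper bound: experiments that never share players do not multiply with each other, and the server only needs the specializations that online players actually use.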

Furthermore, a multi-Variant specialization can reuse item instances from not only the baseline game config but from the individual Variants. Ideally, therefore, a multi-Variant specialization can get each of its items from either the baseline or one of its constituent Variants without needing to make any new copies of the config items.

However, this is complicated by the presence of MetaRefs, which are concrete memory references between game config items. Consider a config item Referrer which contains a memory reference to config item Target (i.e., contains a MetaRef rather than containing just Target's ID or MetaConfigId).

Now, consider a Variant which directly modifies Target but does not modify Referrer; thus, Target needs to be duplicated in memory for this Variant. But due to the memory reference, there is a problem involving Referrer: which in-memory instance of Target should Referrer refer to? If it refers to the baseline instance of Target, that is the wrong instance of Target for players belonging to the Variant. But if it refers to the Variant instance of Target, then that is the wrong instance of Target for players using the baseline config instead of the Variant. Therefore, Referrer needs to be duplicated as well: one copy where it refers to the baseline instance of Target, and another copy where it refers to the Variant instance of Target.
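The Referrer and Target situation can be made concrete with a small standalone sketch. The classes below are illustrative stand-ins, not Metaplay SDK types: `ReferrerByRef` holds an object reference in the way a resolved MetaRef does, while `ReferrerById` holds only a key that is looked up in whichever config is current.

```csharp
using System;
using System.Collections.Generic;

// Illustrative stand-ins only; not Metaplay SDK types.
public sealed class Target
{
    public readonly int Power;
    public Target(int power) { Power = power; }
}

// Holds a concrete object reference, like a resolved MetaRef.
public sealed class ReferrerByRef
{
    public readonly Target Target;
    public ReferrerByRef(Target target) { Target = target; }
}

// Holds only a key that is looked up in whichever config is current.
public sealed class ReferrerById
{
    public readonly string TargetId;
    public ReferrerById(string targetId) { TargetId = targetId; }
    public Target Resolve(IReadOnlyDictionary<string, Target> config) => config[TargetId];
}

public static class ReferrerSketch
{
    public static void Main()
    {
        var baselineTarget = new Target(power: 1);
        var variantTarget = new Target(power: 5); // the Variant modifies Target only

        // By reference: one Referrer instance can point at only one Target,
        // so the Variant needs its own copy of Referrer.
        var baselineReferrer = new ReferrerByRef(baselineTarget);
        var variantReferrer = new ReferrerByRef(variantTarget);
        Console.WriteLine(ReferenceEquals(baselineReferrer, variantReferrer)); // False

        // By ID: a single shared Referrer resolves correctly against either config.
        var baselineConfig = new Dictionary<string, Target> { ["target"] = baselineTarget };
        var variantConfig = new Dictionary<string, Target> { ["target"] = variantTarget };
        var sharedReferrer = new ReferrerById("target");
        Console.WriteLine(sharedReferrer.Resolve(baselineConfig).Power); // 1
        Console.WriteLine(sharedReferrer.Resolve(variantConfig).Power);  // 5
    }
}
```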

In this manner, MetaRefs can propagate the config item duplication to config items that are not directly modified by Variants. The propagation starts from directly modified items and follows the MetaRefs in reverse direction, all the way to transitively referring items.

What's more, this duplication is not constrained to individual Variants. If an item refers to more than one item, it may need to be duplicated for each multi-Variant specialization that modifies the items to which the item refers. This multi-Variant duplication is the primary contributor to significant memory usage for each unique specialization.

The magnitude of the duplication propagation due to MetaRefs can vary greatly depending on the structure of the game config's internal references. In particular, if your config contains long reference chains, that can cause significant duplication. See RoomInfo and NextRoom Reference Example.

For an example of a long reference chain, imagine you have a config item class RoomInfo which describes a room on the game map. The rooms have dependencies such that completing a room unlocks the next room. You could choose to represent this dependency with a NextRoom reference within the RoomInfo class itself.

```csharp
[MetaSerializable]
public class RoomInfo : IGameConfigData<RoomId>
{
    [MetaMember(1)] public RoomId Id;
    [MetaMember(2)] public string Name;
    [MetaMember(3)] public MetaRef<RoomInfo> NextRoom;

    public RoomId ConfigKey => Id;
}
```

The config sheet could look like this. This example has just one long chain of rooms. The part with "..." represents rows omitted here for brevity.

| /Variant | Id #key | Name | NextRoom |
|---|---|---|---|
| | room1 | Room 1 | room2 |
| | room2 | Room 2 | room3 |
| | room3 | Room 3 | room4 |
| | ... | ... | ... |
| | room198 | Room 198 | room199 |
| | room199 | Room 199 | room200 |
| | room200 | Room 200 | |
| RoomTest/V0 | room200 | Room 200, modified | |

Now, even though the Variant RoomTest/V0 modifies just the final room, room200, it will cause all of the other rooms to be duplicated in memory because room200 is reachable via the reference chain from any other room.
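The propagation in this room example can be reproduced with a short standalone sketch. It models config items as strings and references as a dictionary, then walks the references in reverse from the directly modified item. This is an illustration of the idea under those assumptions, not the server's actual implementation, and `DuplicationSketch` and `IndirectlyDuplicated` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DuplicationSketch
{
    // references[a] lists the items that a refers to (the MetaRef direction).
    // Returns the items that are not directly modified but can reach a modified
    // item by following references, i.e. the indirectly duplicated items.
    public static HashSet<string> IndirectlyDuplicated(
        IReadOnlyDictionary<string, List<string>> references,
        IEnumerable<string> directlyModified)
    {
        // Build reversed edges: target -> items referring to it.
        var referrers = new Dictionary<string, List<string>>();
        foreach (var entry in references)
            foreach (var target in entry.Value)
            {
                if (!referrers.TryGetValue(target, out var list))
                    referrers[target] = list = new List<string>();
                list.Add(entry.Key);
            }

        // Breadth-first search from the modified items along reversed edges.
        var modified = new HashSet<string>(directlyModified);
        var visited = new HashSet<string>(modified);
        var queue = new Queue<string>(modified);
        while (queue.Count > 0)
        {
            var item = queue.Dequeue();
            if (!referrers.TryGetValue(item, out var list)) continue;
            foreach (var referrer in list)
                if (visited.Add(referrer))
                    queue.Enqueue(referrer);
        }
        visited.ExceptWith(modified);
        return visited;
    }

    public static void Main()
    {
        // room1 -> room2 -> ... -> room200, as in the sheet above.
        var references = Enumerable.Range(1, 199)
            .ToDictionary(i => $"room{i}", i => new List<string> { $"room{i + 1}" });
        var duplicated = IndirectlyDuplicated(references, new[] { "room200" });
        Console.WriteLine(duplicated.Count); // 199
    }
}
```

Modifying `room1` instead of `room200` would give a count of 0 in this sketch, because no other room refers to `room1`. The cost of a change depends on where the item sits in the chain.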

If your servers are experiencing high memory usage, particularly when running several overlapping experiments at the same time, it's worth investigating if this is caused by game config duplication.

The game server produces real-time metrics about this. You can see these metrics in your "Metaplay Server" Grafana dashboard, which you can typically reach via the link in the "Cluster Metrics & Logs" card on the front page of the LiveOps Dashboard.

In the Grafana dashboard, section In-Memory Game Config Cache contains a few graphs about the game configs that the server is keeping in memory.

If the Average Item Duplication Per Experiment Specialization is high (such as in the thousands), it is often possible to mitigate the problem by identifying the most problematic cases of MetaRef usage and eliminating those MetaRefs. See the following sections Config Reference Analyzer and Using MetaConfigId Instead of MetaRef.

Simply knowing that game config duplication is taking place is a good start, but in order to address the problem, you'll want to know which MetaRefs are causing the most problems. Typically, these are MetaRefs that are involved in long reference chains between config items. Perhaps you are very familiar with how your game config is structured and can guess where those chains are, but the Metaplay SDK has a tool in the Unity Editor to help discover them.

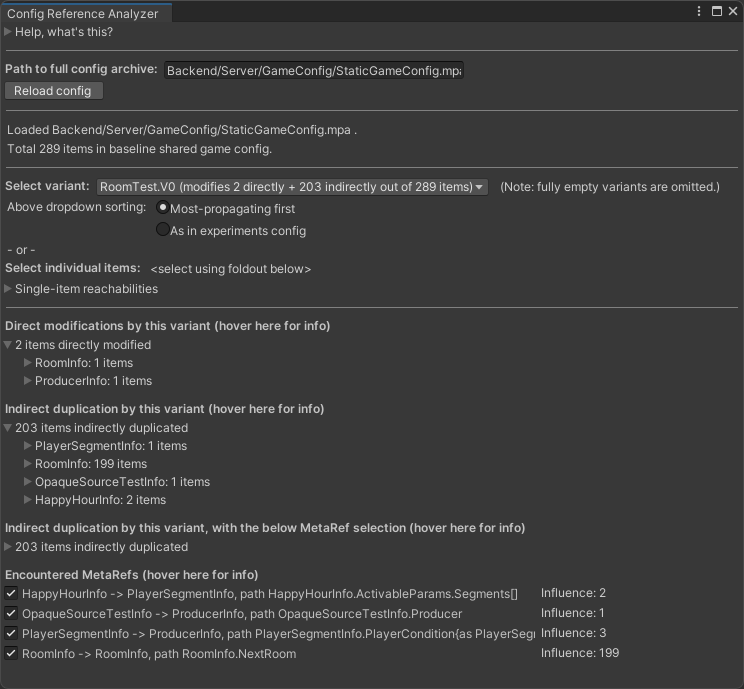

You can open the tool in the Unity Editor menu: Metaplay -> Config Reference Analyzer.

The first thing you should do is check that the Path to full config archive is correct. The default path is correct for most project setups, but you may need to adjust it if you see a "Could not find file" error. This should be the path to the StaticGameConfig.mpa file produced by the config build.

If the config is large, the analyzer can take a while to load it, possibly several minutes.

Once the config has been loaded, you can select a Variant from the Select variant dropdown. Selecting the topmost one is a good bet, since by default they're sorted by how much item duplication they involve (also called "indirect modification" in the tool).

In this example scenario, we have selected Variant V0 from experiment RoomTest. We're using the config data from the RoomInfo and NextRoom Reference Example. In addition to the RoomInfo config items, we've also included some unrelated config content in this example for demonstration purposes, because real game configs will also have lots of content that is not actually involved in duplication problems.

The analyzer tells us that this Variant directly modifies only 2 items (room200 from the earlier example and some unrelated item). However, 203 items end up being indirectly duplicated. The majority of those are the 199 other rooms that transitively refer to the modified room200.

To explore specific MetaRefs, let's look at section Encountered MetaRefs. It lists every MetaRef causing duplication in the selected Variant. Each row in the list shows the referring type, the target type, and the member path where the MetaRef is found inside the referring type. Additionally, it shows the "influence" of each item, which is an indicator of how much that MetaRef contributes to the duplication. The analyzer tool discovers MetaRefs by performing a graph search with config items as nodes and reversed MetaRefs as edges; the influence number of an edge is simply the number of nodes the search reached by that edge.

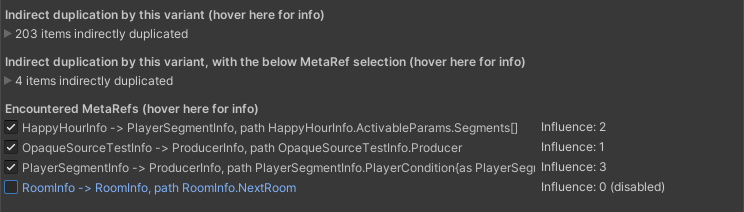

In this example, we can easily see that RoomInfo.NextRoom is the most influential MetaRef. Let's untick the box to see what would happen if this MetaRef did not exist.

In section Indirect duplication by this variant, with the below MetaRef selection, we can see that the number of duplicated items in this Variant would drop to 4 from the previous 203 if we were to remove the MetaRef at RoomInfo.NextRoom. We can use this discovery to decide to refactor this MetaRef into a MetaConfigId, which does not suffer from the duplication problem. See Using MetaConfigId Instead of MetaRef.

Note that your real game config will likely be more complex than this example. In particular, the reference chains don't necessarily consist of just one MetaRef from a config item type to itself; it may involve multiple types referring to each other. Furthermore, the MetaRefs may exist at deeper locations within the config item types, rather than a simple top-level member like RoomInfo.NextRoom.

Finally, be aware that disabling a MetaRef can cause the influence number of other MetaRefs to increase, because the tool's graph search may end up traversing different MetaRefs now that the disabled MetaRef is not available. Therefore, a MetaRef can actually be significant even if it initially shows a low influence; you generally need to do some exploration with the tool, disabling some of the highest-influence MetaRefs one by one, considering the changes in the influences. The goal is to discover a feasible set of MetaRefs to remove that reaches a low duplication count.

One effective way to limit the duplication of configs is to use MetaConfigId instead of MetaRef. This approach involves a different method of resolving references. A MetaConfigId is resolved at usage time by passing a game config resolver (the current game config) to the GetItem(...) method of the MetaConfigId. Unlike MetaRef, the MetaConfigId remains a simple config ID in memory and is not mutated in place, but the resolver is required whenever you want to retrieve the referenced item.

You don't have to replace all of your MetaRefs with MetaConfigIds; you can selectively use one or the other. The most significant reduction in duplication is achieved by using MetaConfigId in game config classes where long reference chains have been identified, as explained in section Config Reference Analyzer. This change results in the references being stored in memory as config IDs instead of as concrete references to items like with MetaRef.

Define MetaConfigId in Your Config Classes: Replace instances of MetaRef with MetaConfigId in your game config classes. For example, if you have a RoomInfo class that references the next room, you would change the type of the reference member:

```csharp
[MetaSerializable]
public class RoomInfo : IGameConfigData<RoomId>
{
    [MetaMember(1)] public RoomId Id;
    [MetaMember(2)] public string Name;
    // Before: [MetaMember(3)] public MetaRef<RoomInfo> NextRoom;
    [MetaMember(3)] public MetaConfigId<RoomInfo> NextRoom;

    public RoomId ConfigKey => Id;
}
```

MetaConfigId has the same serialization format as MetaRef, so this change does not cause compatibility problems with existing serialized data.

Resolve MetaConfigId at Usage Time: When you need to access the item referenced by a MetaConfigId, you need the current game config to act as the resolver. The item is then fetched from the game config using the config ID and the type information in the MetaConfigId. Here's an example of getting the item using MetaConfigId.GetItem() in PlayerModel:

```csharp
RoomInfo GetNextRoom(RoomInfo currentRoom)
{
    // Get the referenced item using the current game config.
    // Before (MetaRef): RoomInfo nextRoom = currentRoom.NextRoom.Ref;
    RoomInfo nextRoom = currentRoom.NextRoom.GetItem(GameConfig); // MetaConfigId
    return nextRoom;
}
```

You can always access the current game config through the PlayerModel. In client-only code, such as in UI, you can typically access MetaplayClient.PlayerModel.GameConfig.

Note that if the current game config does not contain the referenced item, MetaConfigId.GetItem() will throw an exception. If you expect that the reference might be invalid at resolve time, you can alternatively use MetaConfigId.TryGetItem(), which will return null if the reference is invalid. When the MetaConfigId member itself is contained within game config data, this is not a concern, because config-internal MetaConfigIds are validated already when you build the game config; you can safely call GetItem() in such cases. TryGetItem() is intended for cases where the MetaConfigId member is stored elsewhere, such as in PlayerModel, and there is a possibility that a future config will no longer have the referred item.
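The difference between the throwing and the null-returning lookup can be sketched without the SDK. The types below (`FakeGameConfig`, `FakeRoomId`) are stand-ins written for this example and only mimic the behavior described above. The real `MetaConfigId` signatures and exception type may differ, and the use of `InvalidOperationException` here is an assumption.

```csharp
using System;
using System.Collections.Generic;

// Stand-ins for illustration only; these are NOT the Metaplay SDK types.
public sealed class RoomInfo
{
    public readonly string Id;
    public readonly string Name;
    public RoomInfo(string id, string name) { Id = id; Name = name; }
}

// Plays the role of the game config acting as the resolver.
public sealed class FakeGameConfig
{
    public readonly Dictionary<string, RoomInfo> Rooms = new Dictionary<string, RoomInfo>();
}

// Plays the role of MetaConfigId<RoomInfo>: holds only the ID, never an item instance.
public readonly struct FakeRoomId
{
    public readonly string Key;
    public FakeRoomId(string key) { Key = key; }

    // Throws when the item is missing (exception type is an assumption).
    public RoomInfo GetItem(FakeGameConfig config) =>
        config.Rooms.TryGetValue(Key, out var room)
            ? room
            : throw new InvalidOperationException($"No room with id '{Key}' in config");

    // Returns null when the item is missing.
    public RoomInfo TryGetItem(FakeGameConfig config) =>
        config.Rooms.TryGetValue(Key, out var room) ? room : null;
}

public static class ResolveSketch
{
    // An ID stored outside the config (for example in player state) may point
    // at an item that a newer config no longer contains, so check for null.
    public static string DescribeSavedRoom(FakeRoomId saved, FakeGameConfig config)
    {
        RoomInfo room = saved.TryGetItem(config);
        return room != null ? room.Name : "(room no longer exists)";
    }

    public static void Main()
    {
        var config = new FakeGameConfig();
        config.Rooms["room1"] = new RoomInfo("room1", "Room 1");
        Console.WriteLine(DescribeSavedRoom(new FakeRoomId("room1"), config));   // Room 1
        Console.WriteLine(DescribeSavedRoom(new FakeRoomId("room999"), config)); // (room no longer exists)
    }
}
```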